Are you ready to ace your automation tester/automation testing job interview?

Ditch those generic question lists and dive into ours! We’ve analyzed real-world interviews to bring you 75 targeted questions that test your skills and problem-solving mindset.

Need a quick refresher? Our YouTube video breaks down the top 50 questions, helping you stay sharp on the go. Let’s nail this interview together!

Interview Tips for Test Automation Job Interviews

Psychological

- Demonstrate a problem-solving mindset: Employers want automation testers who see challenges as puzzles to solve. Showcase how you break down problems systematically, and enjoy finding streamlined solutions.

- Exhibit a ‘quality first’ attitude: Convey that preventing defects before they reach end-users is a core motivator. This aligns you with their desire to reduce costs and improve user experience.

- Project adaptability: In the ever-evolving world of testing, emphasize how you quickly learn new tools, and are flexible to changing requirements and methodologies.

Organizational

- Frame your experience as collaborative: Highlight projects where you worked effectively with developers and other testers, showing you understand the value of teamwork in the software development lifecycle.

- Communicate impact: Don’t just list the tasks you did; quantify the effect of your automation efforts (e.g., “Implemented test suite that reduced regression cycle by 30%”).

- Alignment with company culture: Research the company’s values and work style. Subtly tailor examples to match their priorities (agile vs. traditional, speed vs. thoroughness, etc.).

Additional Tips

Research the Company:

- Understand the company culture, values, and projects related to automation testing.

- Tailor your answers to align with the company’s objectives and challenges.

Ask Thoughtful Questions:

- Show your interest in the company and the role by asking insightful questions about the automation testing processes, team dynamics, and future projects.

- This demonstrates your engagement and commitment to understanding the company’s needs.

Top automation testing interview questions in 2024

#1 Why do you think we need Automation Testing?

- “I strongly believe in the value of automation testing for several key reasons.

- First and foremost, it speeds up our development process considerably. We can execute far more tests in the same timeframe, identifying issues early on.

- This means faster fixes, fewer delays, and ultimately getting new features into the hands of our users sooner.

- Automation makes our product far more reliable. Tests run consistently every time, giving us a level of trust that manual testing alone can’t match, especially as our applications grow.

- Automated suites scale effortlessly with the code, guaranteeing that reliability is never compromised.

- From a cost perspective, while an initial investment is needed, automation quickly starts paying for itself. Think about the time developers save rerunning regression tests, the faster turnaround on bug fixes, and the prevention of expensive production failures.

- Beyond that, automation lets our QA team be strategic. Instead of repeating the same basic tests, they can focus on exploratory testing, digging into intricate user flows, and edge cases where human analysis is truly needed. This ensures more comprehensive, thoughtful testing.

- Automation changes how we think about development. It encourages ‘design for testability’, with developers writing unit tests alongside their code.

- This creates more robust systems from the get-go, preventing surprises later. It fits perfectly with modern DevOps practices, allowing us to test continuously and iterate quickly, a real edge in a competitive market.”

#2 How do you decide which test cases to automate?

“I use a few key criteria to determine if a test is a good candidate for automation:

- Repetition: How often will this test need to be run? Automation excels with tests executed across multiple builds or with varying data sets.

- Stability: Tests for unchanging, mature features are ideal for automation, minimizing maintenance overhead.

- Risk: Automating tests covering critical, high-risk functionalities provides a valuable safety net against regressions.

- Complexity: Time-consuming or error-prone manual tests significantly benefit from automation’s precision and speed.”

#3 What are the best practices for maintaining automation test scripts?

“To ensure a robust and adaptable automation test suite, I adhere to a number of core principles:

- Page Object Model (POM): I firmly believe in the POM pattern. By encapsulating UI element locators separately from the test logic, I introduce a layer of abstraction that significantly increases maintainability. A centralized object repository means changes to the UI often only require updates in a single location, dramatically reducing the impact on the wider test suite.

- Modular Design & Reusability: I break down test scripts into reusable functions and components. This promotes code efficiency, prevents redundancy, and makes it simple to update individual functionalities without disrupting the entire suite.

- Meaningful Naming Conventions & Comments: Clear, descriptive naming for variables, functions, and tests, along with concise comments, ensure the code is self-documenting. This is crucial not only for my own understanding but also for streamlined team collaboration and knowledge sharing.

- Version Control: Leveraging a system like Git is essential. I can track every change, easily revert if needed, and facilitate a collaborative approach to test development.

- Data-Driven Testing: I decouple test data from the scripts themselves, using external files (like Excel or CSV) or even databases. This allows for executing tests with diverse input scenarios, enhancing coverage while simplifying both updates and troubleshooting.

- Regular Reviews & Refactoring: I don’t treat maintenance as a reactive task. Proactive code reviews let me identify areas for optimization, remove outdated logic, and continuously improve the suite’s efficiency.

Beyond the Technical: I recognize that successful test maintenance involves a strong team approach. I prioritize open communication channels with developers and emphasize shared ownership of the automation suite to ensure it remains a valuable asset as the application evolves.”
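To make the Page Object Model point concrete, here is a minimal sketch of the pattern. It is an illustration under stated assumptions, not a definitive implementation: `FakeDriver`, its methods, and the CSS locators are all hypothetical stand-ins so the example runs without a browser. In a real suite the page object would wrap an actual driver such as Selenium WebDriver.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDriver:
    """Hypothetical stand-in for a browser driver; it records actions instead of driving a browser."""
    actions: list = field(default_factory=list)

    def type_into(self, locator: str, text: str) -> None:
        self.actions.append(("type", locator, text))

    def click(self, locator: str) -> None:
        self.actions.append(("click", locator))


class LoginPage:
    """Page object: the only place that knows the login page's locators (assumed values)."""
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type='submit']"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def log_in(self, username: str, password: str) -> None:
        # Tests call this method and never touch locators directly.
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_login_submits_credentials() -> None:
    driver = FakeDriver()
    LoginPage(driver).log_in("alice", "s3cret")
    # The last recorded action should be the click on the submit button.
    assert driver.actions[-1] == ("click", LoginPage.SUBMIT)


test_login_submits_credentials()
```

If the submit button's locator changes, only the `SUBMIT` constant needs updating; every test that calls `log_in` stays as it is.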

#4 When would you choose not to automate a test?

“While I’m a strong advocate of automation, I recognize that certain scenarios are better suited for manual testing or a hybrid approach. I make this decision based on several factors:

- Unstable or Rapidly Changing Features: Automating tests for areas of the application in active flux can be counterproductive. Frequent UI or functionality changes would require constant script updates, creating more maintenance work than value.

- Exploratory Testing: Tasks requiring human intuition and creativity, like evaluating user interface aesthetics or uncovering unexpected edge cases, are best handled by skilled manual testers.

- One-Off Tests: If a test only needs to run once or twice, the time spent automating it might not be worth it.

- Resource Constraints: If I’m working with limited time or a small team, I might prioritize automating high-risk, repetitive tests while carefully selecting other areas where manual testing may be more efficient.

- Proof of Concept: During early project phases, manual exploration can help define requirements and uncover potential automation use cases.

Crucially, I see this as a dynamic decision. A test initially deemed unsuitable for automation might become a candidate later once the feature stabilizes or the team’s resources expand.”

#5 Tell me about your experience with Selenium?

“I have significant experience working with the Selenium suite, particularly Selenium WebDriver for web application testing. My focus lies in developing robust and maintainable test automation frameworks tailored to specific project needs.

Here are some key aspects of my Selenium proficiency:

- Cross-Browser Compatibility: Ensuring our applications work seamlessly across different browsers is critical. I design my Selenium scripts with this in mind, strategizing for compatibility with Chrome, Firefox, Edge, and others as required.

- Framework Design: I have experience with both keyword-driven and data-driven frameworks using Selenium. I understand the trade-offs between rapid test development and long-term maintainability, selecting the right approach based on project requirements.

- Integration Expertise: I’ve integrated Selenium with tools like TestNG or JUnit for test management and reporting, as well as continuous integration systems like Jenkins for automated test execution.

- Complex Scenarios: I’m comfortable automating a wide range of UI interactions, dynamic elements, and handling challenges like synchronization issues or AJAX-based applications.

Beyond technical skills, my Selenium work has taught me the value of collaborating with developers to make applications testable from the start. I’m always looking for ways to improve efficiency and make our automation suite a key pillar of our quality assurance process.”

#6 What are the common challenges faced in automation testing, and how do you overcome them?

Common challenges in automation testing include maintaining test scripts, handling dynamic elements, achieving cross-browser compatibility, dealing with complex scenarios, and integrating with Continuous Integration/Continuous Deployment (CI/CD) pipelines.

Each of these challenges has a practical countermeasure:

- Script maintenance: Keep scripts in a version control system so every change is tracked.

- Dynamic elements: Use dynamic locators and waits.

- Cross-browser compatibility: Run tests on cloud-based testing platforms.

- Complex scenarios: Build on strong automation frameworks like Selenium or Appium, and write modular, reusable test scripts.

- CI/CD integration: Use tools like Jenkins or GitLab CI so automated tests run seamlessly within the pipeline.

Prioritizing regular maintenance and updates of test scripts and frameworks is essential to ensuring long-term efficiency and effectiveness in automation testing endeavors.

#7 How do you ensure the reliability of automated tests?

Reliability is paramount in any testing strategy. Here’s how I ensure automated tests stay reliable:

- A stable test environment is a priority, because it minimizes variability in test results.

- Our test framework is robust, designed to handle exceptions gracefully and provide clear error reporting.

- Effective management of test data ensures predictable outcomes and reduces false positives.

- Regular maintenance of test scripts and frameworks keeps them aligned with application changes.

- Continuous monitoring allows us to identify and address any issues promptly during test execution.

- Version control systems track changes in test scripts, facilitating collaboration and ensuring code integrity.

- Comprehensive cross-platform testing validates tests across various environments for thorough coverage.

- Code reviews play a vital role in maintaining the quality and reliability of test scripts.

- Thoughtful test case design focuses on verifying specific functionality, reducing flakiness.

- Execution of tests in isolation minimizes dependencies and ensures reproducibility of results.

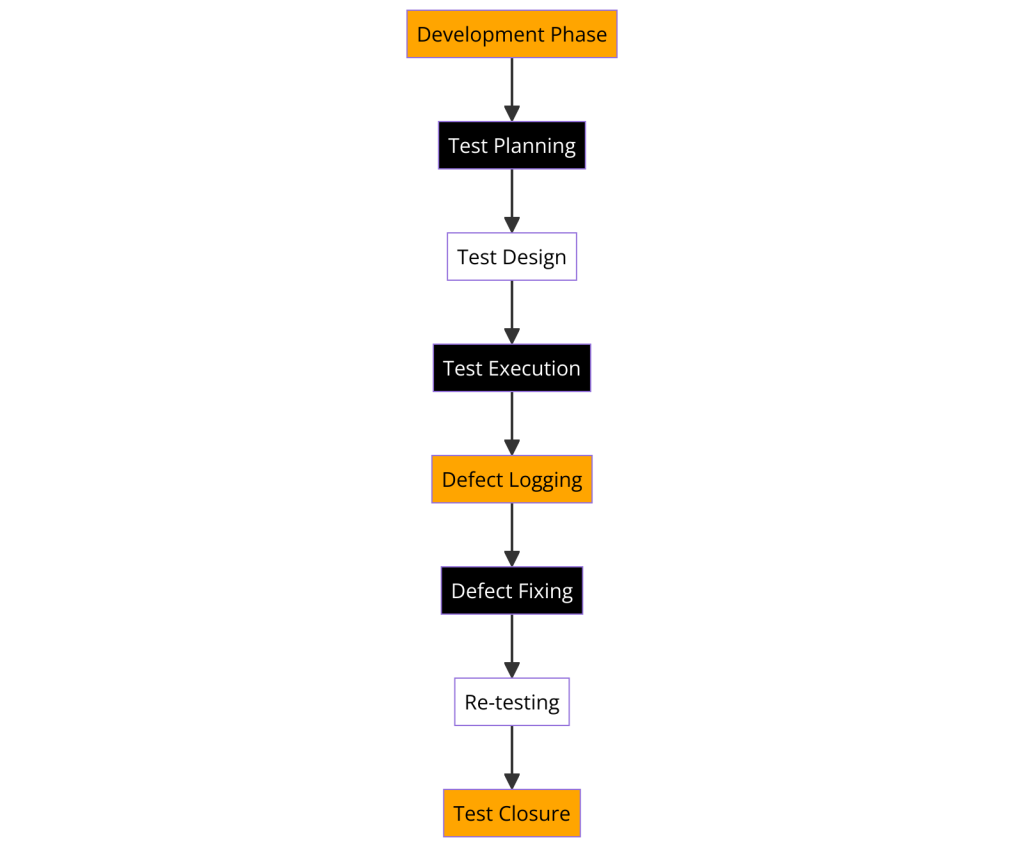
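As an illustration of running tests in isolation, the sketch below uses Python's standard `unittest` module. The `CartFileTests` class and the `cart.txt` file are hypothetical examples; the point is only that each test gets its own fresh temporary directory in `setUp` and cleans it up in `tearDown`, so no test depends on state left behind by another.

```python
import tempfile
import unittest
from pathlib import Path


class CartFileTests(unittest.TestCase):
    """Hypothetical tests; each one runs against its own temporary directory."""

    def setUp(self) -> None:
        # Fresh directory per test, so no state leaks between tests.
        self._tmp = tempfile.TemporaryDirectory()
        self.cart_file = Path(self._tmp.name) / "cart.txt"

    def tearDown(self) -> None:
        # Remove the directory and everything in it after each test.
        self._tmp.cleanup()

    def test_new_cart_is_empty(self) -> None:
        self.assertFalse(self.cart_file.exists())

    def test_added_item_is_saved(self) -> None:
        self.cart_file.write_text("book\n")
        self.assertEqual(self.cart_file.read_text().splitlines(), ["book"])


def run_suite() -> unittest.TestResult:
    """Load and run the tests above, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartFileTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

Because neither test relies on the other, they pass in any order, which is what makes results reproducible.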

#8 What do you do in the Planning Phase Of Automation?

“My focus during the planning phase is on laying a strong foundation for successful automation. First and foremost, I carefully analyze which test cases would benefit most from automation.

I look for tests that are repeatedly executed, cover critical areas, or involve a lot of data variations. Then, I assess potential tools and frameworks, taking the team’s existing skills and the specific application technology into account.

Alongside that, I’ll consider which type of test framework best suits the project – whether that’s data-driven for extensive datasets, keyword-driven for ease of use, or perhaps a hybrid approach. I’ll then work with the team to establish coding standards for consistency and maintainability.

Importantly, I’m realistic during the scoping and timeline phases. We prioritize the test suites that give us the best return on automation investment, and I set realistic estimates that factor in development, testing, and potential maintenance.

I also think proactively about resources. Are there specific roles the team needs for automation success? Are there training needs we should address early on? Finally, I work to identify any potential bottlenecks and risks, so we have plans in place to mitigate them.

Throughout the planning phase, I believe open communication with all stakeholders is essential. Automation success goes beyond the technical when it aligns with the overall goals of the project.”

#9 Explain the concept of data-driven testing. How do you implement it in your automation framework?

- The Core Idea: At its heart, data-driven testing separates your test logic from the test data. It allows you to run the same test multiple times with different input values, increasing test coverage without multiplying the number of scripts.

- Benefits:

  - Efficiency: Execute a wide range of test scenarios with minimal code changes.

  - Scalability: Easily expand test coverage as new data sets become available.

  - Maintainability: Updates to test data don’t require modifying the core test scripts.

Implementation in an Automation Framework

- Data Source:

  - External Files: Commonly used formats include CSV, Excel, or even databases.

  - Data Generation: For large or complex data sets, consider coding solutions or tools to generate realistic test data.

- Integration with Test Scripts:

  - Data Providers: Use the features offered by testing frameworks (like TestNG or JUnit) to read data from the source of your choice and feed it into tests.

  - Parameterization: Parameterize your test methods to accept input values from the data provider.

  - Looping: Use loop constructs to iterate through each row of data, executing the test logic with each set of input values.

Example:

Consider testing a login form with multiple username/password combinations. A data-driven approach would involve storing the credentials in an external file and using your framework to read and pass each combination to the test script.
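The login example can be sketched roughly as follows. This is an assumption-laden illustration: the credentials, the `attempt_login` function, and the column names are all hypothetical, and the CSV is embedded as a string only so the example is self-contained. In practice the data would live in an external file and the login call would drive the real application.

```python
import csv
import io

# Hypothetical test data; normally this would be an external CSV file.
CREDENTIALS_CSV = """username,password,expected
alice,correct-horse,success
alice,wrong,failure
,correct-horse,failure
"""


def attempt_login(username: str, password: str) -> str:
    """Hypothetical stand-in for the system under test."""
    valid = {"alice": "correct-horse"}
    return "success" if valid.get(username) == password else "failure"


def load_cases(source: str) -> list:
    """Read every data row into a dict keyed by the CSV header."""
    return list(csv.DictReader(io.StringIO(source)))


def run_login_cases(source: str) -> list:
    """Run the same test logic once per data row; return (username, passed) pairs."""
    results = []
    for row in load_cases(source):
        actual = attempt_login(row["username"], row["password"])
        results.append((row["username"], actual == row["expected"]))
    return results


for username, passed in run_login_cases(CREDENTIALS_CSV):
    print(f"{username!r}: {'PASS' if passed else 'FAIL'}")
```

Adding a new scenario means adding a row to the data, not writing another test function.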

Beyond the Technical: I always consider maintainability when implementing data-driven testing. A well-structured data source and clear separation of data from test logic make it easier for the team to update or expand test scenarios.

#10 There are a few conditions where we cannot use automation testing for Agile methodology. Explain them.

While automation testing offers significant benefits in Agile, there are situations where manual testing remains the preferred approach:

- Exploratory Testing: Agile methodologies emphasize rapid development and innovation. Exploratory testing, where testers freely explore the application to uncover usability issues or edge cases, is often crucial in early stages. Automation is less suited for this type of open-ended, creative exploration.

- Highly Volatile Requirements: When project requirements are constantly changing, automating tests can be counterproductive. The time spent creating and maintaining automated scripts might be wasted if core functionalities are frequently revised.

- Low-Risk Visual Elements: Certain visual aspects, like layout or aesthetics, may not warrant automation. Manual testing allows testers to leverage their human judgment and provide subjective feedback on user experience.

- Limited Resources: If your team is small or has limited time for automation setup, focusing manual efforts on critical functionalities might be a better use of resources. Invest in automation when it demonstrates a clear ROI for your specific project.

- Proof-of-Concept Stages: During initial development phases, manual testing helps gather valuable insights to inform automation decisions later. Once core functionalities solidify, you can identify the most valuable test cases for automation.

#11 What are the most common types of testing you would automate?

“I focus on strategically automating tests that offer the highest return on investment within our development process. Here are the primary categories I prioritize:

- Regression Testing: Every code change has the potential to break existing functionality. A comprehensive automated regression suite provides a safety net, allowing us to confidently make changes and deploy updates frequently.

- Smoke Testing: Automating a suite of basic sanity tests ensures core functionalities are working as expected after each build. This provides rapid feedback, saving time and preventing critical defects from slipping through.

- Data-Driven Tests: Scenarios requiring numerous input combinations, like login forms, calculations, or boundary-value testing, are ideal for automation. It allows extensive coverage with minimal script duplication.

- Cross-Browser & Cross-Device Tests: Ensuring our application works as intended across a range of browsers and devices is often tedious and time-consuming to do manually. Automation makes this testing streamlined and efficient.

- Performance and Load Tests: While some setup is required, automating performance tests allows us to simulate realistic user loads and identify bottlenecks early on. This is crucial for ensuring the application scales effectively.”

#12 What are some risks associated with automation testing?

Some of the common risks are:

- One of the major risks in automation testing is the difficulty of finding skilled testers. Testers need good knowledge of automation tools and programming languages, must be technically sound, and must be able to adapt to new technology.

- The initial cost of automation testing is higher, and convincing the client to accept this cost can be tedious.

- Automating against a UI that is not yet fixed, or that changes constantly, is risky.

- Automating an unstable system is risky too. In such scenarios, the cost of script maintenance is very high.

- Test cases that will be executed only once are not worth automating.

#13 What is the tree view in automation testing?

Understanding Tree Views

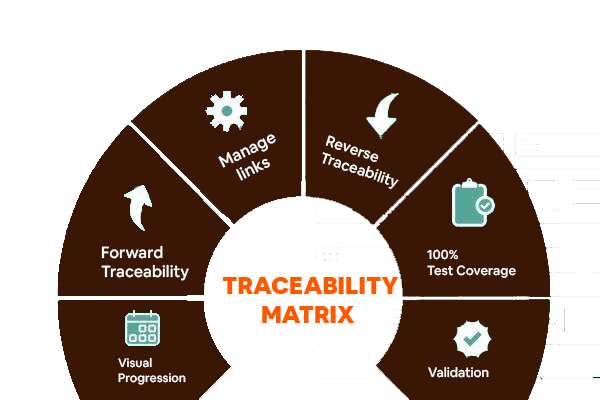

In the context of automation testing, a tree view represents the hierarchical structure of elements in a web page or application interface. Each node in the tree represents a UI element, and its branches depict the parent-child relationships between elements.

Why Tree Views are Important in Automation

- Unique Identification: Tree views help automation scripts accurately identify elements, especially when those elements lack distinctive attributes like fixed IDs. Testers can traverse the tree structure using parent-child relationships to pinpoint their target.

- Dynamic UI Handling: If the application’s interface changes, using a tree view can make test scripts more resilient. Adjusting paths within the tree might be sufficient, rather than completely overhauling object locators.

- Test Case Visualization: Tree views can present test steps in a logical format that reflects the way users interact with the interface.

Example of How It’s Used

Imagine a test to verify the “Contact” link. An automation script could use the tree structure:

- Locate the top-level “Website” element.

- Find the “Contact” child element within the structure.

- Click the “Contact” element.
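The traversal above can be sketched without a browser using Python's standard `xml.etree.ElementTree`. The markup, the `website` id, and the `find_child_link` helper are hypothetical and heavily simplified; a real script would walk the live page through a tool such as Selenium WebDriver, and the final "click" step is left out because there is nothing to click here.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical, simplified page markup used only for illustration.
PAGE = """
<html>
  <body>
    <nav id="website">
      <a href="/">Home</a>
      <a href="/about">About</a>
      <a href="/contact">Contact</a>
    </nav>
  </body>
</html>
"""


def find_child_link(root: ET.Element, parent_id: str, link_text: str) -> Optional[ET.Element]:
    """Locate the parent node by id, then search only its direct children."""
    parent = root.find(f".//*[@id='{parent_id}']")
    if parent is None:
        return None
    for child in parent:
        if child.tag == "a" and (child.text or "").strip() == link_text:
            return child
    return None


root = ET.fromstring(PAGE.strip())
contact = find_child_link(root, "website", "Contact")
print(contact.get("href") if contact is not None else "not found")
```

Anchoring the search on a parent node means the lookup does not depend on the link having its own fixed id.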

Automation Tool Support

Many testing tools have built-in features to interact with tree views:

- Selenium WebDriver: Provides methods to locate elements by traversing the tree structure (using XPath or other strategies).

- Appium: Supports tree view concepts for mobile app testing.

- UI Automation Frameworks: Often have libraries for easy tree view manipulation.

#14 Your company has decided to implement new automation tools based on current requirements. What features will you look for in an automation tool?

“Choosing the right automation tool is a strategic decision that goes beyond ticking off a list of features. I focus on finding a tool that aligns with our project’s unique requirements, as well as long-term team needs. Here’s the framework I use for evaluation:

- Technology Fit: The primary consideration is whether the tool supports our application’s technology stack. Can it test our web frontend, backend APIs, and mobile components effectively?

- Ease of Use & Learning Curve: The tool’s usability impacts adoption and maintainability. I assess if it suits our team’s skillset. Do we need extensive coding experience, or are there features for less technical testers to create scripts?

- Framework Flexibility: Will the tool allow us to build the type of framework we envision? Does it support data-driven, keyword-driven, or hybrid models? Can we customize it to our needs?

- Test Reporting & Integration: I look for tools with clear reporting capabilities that integrate seamlessly with our CI/CD pipeline and defect tracking systems. This ensures test results provide actionable insights.

- Scalability: Will the tool grow with our application and test suite? Can it handle increasing test volumes and complex scenarios?

- Community & Support: An active community and available documentation ensure we have resources to access if we encounter challenges. For commercial tools, I evaluate their support offerings.

- Cost-Benefit Analysis: I consider both the initial cost and the ongoing maintenance. Open-source tools might require development investment, while commercial ones may involve licensing fees.

Importantly, I involve key stakeholders in the decision-making process. Collaboration between testers and developers ensures we select a tool that empowers the entire team.”

#15 What are some disadvantages of automation testing?

Some of the disadvantages of automation testing are:

- Designing the tooling requires a lot of manual effort.

- Tools can be buggy, inefficient, or costly.

- Tools can have technological limitations.

#16 Are there any prerequisites for automation testing? If so, what are they?

“Yes, successful automation testing relies on several key prerequisites:

-

Stable Application Under Test (AUT): Automating tests for an application in constant flux leads to scripts requiring frequent updates, undermining the investment. A degree of feature maturity is essential.

-

Clearly Defined Test Cases: Automation isn’t about replacing test design. Knowing precisely what you want to test and the expected outcomes is crucial for creating effective scripts.

-

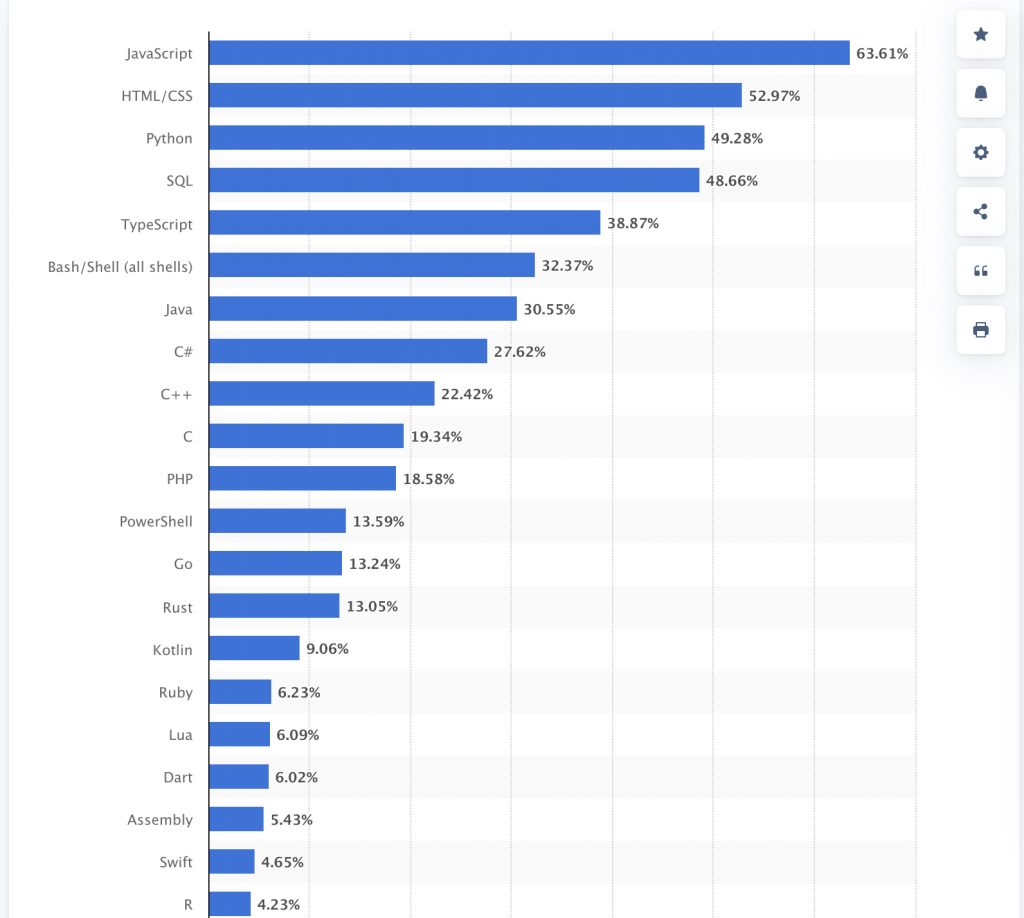

Programming Proficiency: While there’s increasing accessibility in automation tools, an understanding of coding concepts and at least one scripting language is fundamental for developing flexible and maintainable tests.

-

Well-Structured Test Environment: Consistent test environments (operating systems, browsers, etc.) promote reliable test execution and minimize false positives caused by environmental factors.

-

Commitment to Maintenance: Automated test suites aren’t self-sustaining. There must be a plan for updating scripts and troubleshooting as the application evolves.

-

Realistic Expectations: Automation isn’t a magic wand. Understanding its strengths and limitations helps set realistic goals and timelines for implementation.

Importantly, I view the decision to automate as a calculated one. I evaluate the current state of the project against these prerequisites to ensure we’re setting up our automation efforts for success.”

#17 What types of automation frameworks have you worked with?

“Throughout my career, I’ve had the opportunity to work with a diverse range of automation frameworks, tailoring my selections to best suit the unique needs of each project. Here’s a breakdown of the primary types I have experience with:

- Data-Driven Frameworks: I have a strong understanding of how to design frameworks that separate test data from test logic. This has been invaluable when dealing with applications featuring extensive data combinations, input variations, or scenarios requiring extensive validation. I’m adept at sourcing data from external files (Excel, CSV) or integrating with databases.

- Keyword-Driven Frameworks: I value the ease of maintainability and readability that keyword-driven frameworks offer. I’ve developed these frameworks to enable less technical team members to contribute to test automation efforts, abstracting the underlying complexities of the code.

- Hybrid Frameworks: Often, the most effective solutions lie in a blend of approaches. I’ve built robust hybrid frameworks that leverage the strengths of both data-driven and keyword-driven models, maximizing reusability and scalability.

- Behavior-Driven Development (BDD): For projects where close collaboration between business stakeholders and testers was crucial, I’ve employed BDD frameworks (like Cucumber). This has enabled better communication through defining scenarios in a natural language format.

Beyond specific types, I always emphasize creating frameworks with modularity and maintainability in mind. I’m also comfortable integrating automation frameworks with continuous integration systems like Jenkins for streamlined execution.

Crucially, I don’t adhere to a one-size-fits-all mentality. My selection of a framework is driven by factors like test complexity, team skills, technology compatibility, and the project’s overall quality goals.”

#18 State the difference between Open Source Tools, Vendor Tools, And In-house Tools?

The differences between open source tools, vendor tools, and in-house tools are:

- Open source tools are free to use, and their source code is publicly available online for others to use.

- Vendor tools are commercial tools developed by companies. You have to purchase a license to use them, and they come with technical support for resolving issues. Examples include WinRunner, SilkTest, LR, QA Director, QTP, Rational Robot, QC, RFT, and RPT.

- In-house tools are custom-made by companies for their own internal use.

#19 What are the mapping criteria for successful automation testing?

“I believe successful automation testing hinges on identifying the areas where it will deliver the most significant value. I consider the following mapping criteria:

- Test Case Characteristics: Repetition, stability, risk, and complexity.

- Application Under Test (AUT): Testability and technology stack.

- Return on Investment (ROI):

  - Defect Detection Ratio: How effective are automated tests at finding bugs compared to manual testing within a specific timeframe? A higher ratio demonstrates value.

  - Automation Execution Time: How quickly does the automated suite run compared to manual execution? This directly translates to saved time.

  - Time Saved for Product Release: If automation speeds up testing cycles, can we deploy features or updates sooner? This can offer a competitive advantage.

  - Reduced Labor Costs: While there’s an upfront investment, does automation lessen the need for manual testers over the project’s lifespan?

  - Overall Cost Decrease: Do reduced labor needs, bug prevention, and faster release cycles result in tangible cost savings in the long run?

- Team & Resources: Skillset and time investment.

- Project Context: Agile fit and criticality.

Importantly, I view this mapping process as dynamic. Re-evaluating these criteria, alongside the ROI metrics, throughout the project lifecycle ensures automation continuously delivers on its intended value.”

Why this answer works:

- Measurable Success: The ROI metrics show you consider quantifiable outcomes, not just vague benefits.

- Business Alignment: Speaking to time-to-market and cost savings resonates with stakeholders outside of pure testing.

- Focus on the Long Game: It positions you as someone thinking about automation as a strategic investment.

If asked about ROI specifically:

If the interviewer asks specifically about these ROI metrics, provide the core answer above first. Then, elaborate on each metric with examples of how you’ve tracked them in previous projects to show real-world results.

#20 What is the role of version control systems (e.g., Git) in automation testing?

“Version control systems like Git offer several key benefits that make them essential to efficient and reliable automation testing:

- Collaboration: Git enables seamless collaboration among testers and developers working on the test suite. It facilitates easy code sharing, conflict resolution, and parallel development.

- Tracking Changes & Rollbacks: Git meticulously tracks every change to automation scripts, including who made the change and when. If a new test script introduces issues, it’s simple to roll back to a previous, known-good version.

- Branching for Experimentation: Git’s branching model allows teams to experiment with new test scenarios or major updates without disrupting the main suite. This fosters innovation and safe parallel testing.

- Test Environment Alignment: Git can version control configuration files related to test environments. This ensures that the right automated tests are linked to their correct environment configurations, minimizing discrepancies.

- Historical Record: Git maintains a complete history of the automation suite. This aids in understanding testing trends, analyzing how test coverage has evolved, and even pinpointing the code change that might have introduced a regression.

- Integration with CI/CD Pipelines: Git integrates seamlessly with continuous integration systems. Any code changes to the test suite can automatically trigger test runs, providing rapid feedback and accelerating the development process.”

#21 What are the essential types of test steps in automation?

Core Step Types

- Navigation: Automated steps to open URLs, interact with browser buttons (back, forward), and manipulate UI elements for traversal.

- Input: Entering data into fields, selecting from dropdown lists, checkboxes, radio buttons, and handling various input methods.

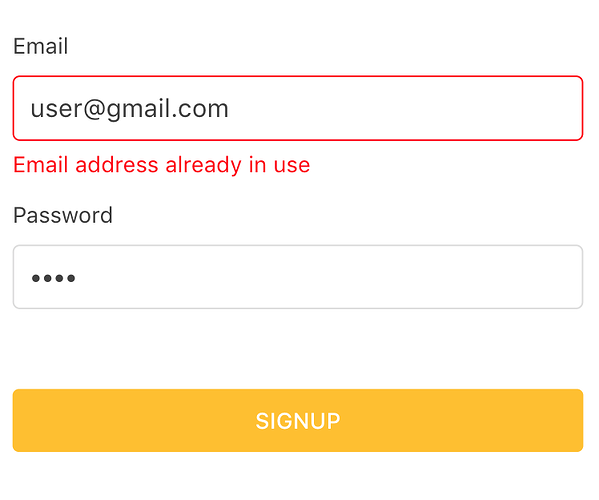

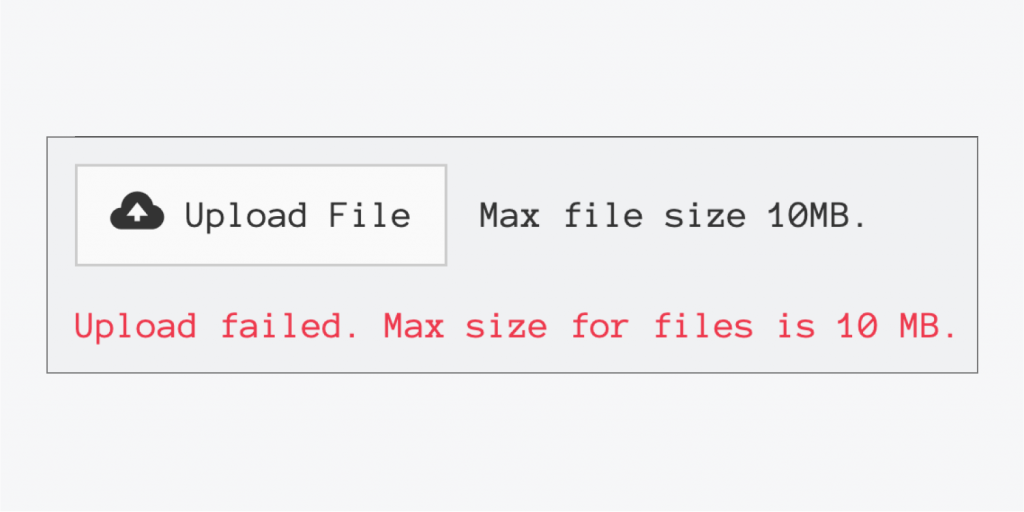

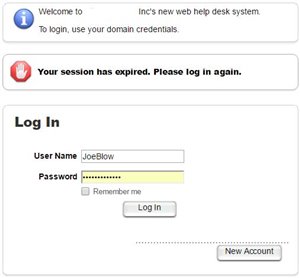

- Verification/Assertions: Central to automation, these steps verify that the actual outcome of a test action matches the expected result. They can range from simple element visibility checks to complex data validations.

- Synchronization: Steps that introduce waits (implicit, explicit) or conditional checks to ensure the test script execution aligns with the pace of the application under test, preventing premature failures.

- Test Setup & Teardown: Pre-test actions like logging in, creating test data, and post-test steps like clearing data, closing browsers, etc. These maintain a clean state for each test.
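To illustrate the synchronization step, here is a minimal sketch of an explicit wait written with only the standard library. The `wait_until` helper and its parameters are hypothetical names chosen for this example; tools such as Selenium ship their own explicit-wait utilities, so treat this as a picture of the idea rather than a replacement for them.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False if the wait timed out.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Simulated element that becomes "visible" 0.2 seconds from now.
appears_at = time.monotonic() + 0.2


def element_visible() -> bool:
    return time.monotonic() >= appears_at


print("visible" if wait_until(element_visible, timeout=2.0) else "timed out")
```

Unlike a fixed `sleep`, the wait returns as soon as the condition holds, so tests are only as slow as the application requires.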

Beyond the Basics

- Conditional Logic: Implementing ‘if-then-else’ logic or loops allows for different test execution paths based on data or application state.

- Data Manipulation: Steps that involve reading data from external sources (files, databases), transforming, or generating test data on the fly.

- API Calls: Interacting with backend APIs to directly test functionality, set up test conditions, or validate responses.

- Reporting: While not a direct test action, automated reporting steps are crucial for logging results, generating dashboards, and integrating with test management tools.

In an Interview Context

Emphasize that knowing these step types is only the foundation. The real focus lies in strategically combining them to model complex user flows and create test scenarios that deliver maximum value in the context of the application being tested.

#22 How do you handle test data management in automation testing, and where do you prefer to source the data from?

“I believe effective test data management is crucial for robust and maintainable automation suites. Here’s my approach:

1. Data Separation: I firmly advocate for decoupling test data from test scripts. This improves maintainability by allowing data updates without modifying code and enables executing a single test with multiple data sets.

2. Data Sourcing Strategies: I select the best sourcing approach based on the project needs:

- External Files: For diverse or frequently changing data, I use Excel, CSV, or JSON files. These are easy to manage, share with non-technical stakeholders, and integrate with frameworks.

- Test Data Generators: When large or complex datasets are needed, I explore coding solutions or dedicated libraries for generating realistic synthetic data on the fly.

- Databases: For applications heavily reliant on database interactions, I might query a test database directly. This facilitates integrated testing of data flows.

- Hybrid Approach: Combining these methods often provides the most flexible solution.

3. Test Data Handling in Code:

- Data Providers: I leverage data providers within testing frameworks (e.g., TestNG, JUnit) to feed data seamlessly into test methods.

- Parameterization: I parameterize test methods to dynamically accept data from my chosen source, enabling data-driven execution.

- Secure Storage: For sensitive test data, I ensure encryption and adherence to best practices for data protection.
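
A minimal sketch of data separation and parameterization, assuming the test data sits in CSV form. The `LOGIN_DATA` rows, the `attempt_login` stand-in for the system under test, and the helper names are all hypothetical; in a real suite the data would live in an external file and a framework's data provider would feed it to the test.

```python
import csv
import io

# Hypothetical test data; in a real suite this would be an external CSV file.
LOGIN_DATA = """username,password,expected
alice,correct-horse,success
alice,wrong-password,failure
,correct-horse,failure
"""


def attempt_login(username, password):
    """Stand-in for the system under test (an assumption for this sketch)."""
    if username == "alice" and password == "correct-horse":
        return "success"
    return "failure"


def load_rows(text):
    """Parse CSV text into a list of dictionaries, one per test case."""
    return list(csv.DictReader(io.StringIO(text)))


def run_data_driven(rows):
    """Run the same check once per data row and collect (row, passed) pairs."""
    results = []
    for row in rows:
        actual = attempt_login(row["username"], row["password"])
        results.append((row, actual == row["expected"]))
    return results


results = run_data_driven(load_rows(LOGIN_DATA))
for row, passed in results:
    print(f"{row['username'] or '<empty>'}: {'PASS' if passed else 'FAIL'}")
```

Adding a new scenario then means adding a data row, with no change to the test logic.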

Beyond the Technical

- Collaboration: I involve developers and potentially database admins to ensure the test data aligns with real-world scenarios and can be easily provisioned in test environments.

- Maintainability: My data storage and retrieval methods prioritize readability and ease of updates, as test data requirements evolve alongside the application.”

#23 Explain the importance of reporting and logging in automation testing.

“Reporting and logging are the backbone of effective automation testing for several reasons:

- Visibility & Transparency: Detailed test reports provide clear insights into the health of the application under test. They communicate the number of tests run, pass/fail rates, execution times, and often include error logs or screenshots for quick issue diagnosis.

- Troubleshooting & Analysis: Comprehensive logs enable developers and testers to pinpoint the root cause of failures. Detailed logs might record input data, element locators, and step-by-step actions taken by the test, allowing for efficient debugging.

- Historical Trends: Test reports over time offer valuable historical context. They can help identify recurring problem areas, measure automation coverage improvements, and demonstrate the overall effectiveness of quality assurance efforts.

- Stakeholder Communication: Well-structured reports are an essential communication tool for non-technical stakeholders. They provide a high-level overview of quality metrics, helping to inform project decisions and build trust.

- Process Improvement: Analyzing reports and logs can reveal inefficiencies in the testing process itself. Perhaps certain types of tests are prone to flakiness, or excessive execution time points to areas where optimization is needed.

- Integration with CI/CD: Automation thrives when integrated into continuous integration pipelines. Clear test reporting becomes essential for making informed go/no-go decisions for deployment.

In Practice:

I prioritize designing reports and logs that are informative, well-structured, and tailored to the stakeholder. A mix of high-level summaries and granular detail allows for different uses of the results.

Importantly, reports and logs are not just about recording results – they are powerful tools for driving continuous improvement of both the product and the testing process itself.”
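
To make the split between a high-level summary and granular logs concrete, here is a small sketch using Python's standard `logging` module. The `run_suite` runner and the two `check_*` functions are hypothetical examples, not part of any real framework; one check fails on purpose so the log shows a failure entry.

```python
import io
import logging
import time


def run_suite(checks, logger):
    """Run (name, callable) pairs, log each outcome, and return a summary dict."""
    summary = {"total": 0, "passed": 0, "failed": 0, "failures": []}
    for name, check in checks:
        summary["total"] += 1
        started = time.perf_counter()
        try:
            check()
        except AssertionError as exc:
            summary["failed"] += 1
            summary["failures"].append(name)
            logger.error("FAIL %s: %s", name, exc)
        else:
            summary["passed"] += 1
            logger.info("PASS %s", name)
        finally:
            logger.debug("%s took %.4fs", name, time.perf_counter() - started)
    return summary


def check_addition():
    assert 1 + 1 == 2


def check_broken_total():
    # Fails on purpose to show what a failure looks like in the log.
    assert sum([1, 2]) == 4, "expected cart total 4, got 3"


log_stream = io.StringIO()  # granular log, captured in memory for this sketch
logger = logging.getLogger("suite_sketch")
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(logging.StreamHandler(log_stream))

summary = run_suite(
    [("check_addition", check_addition), ("check_broken_total", check_broken_total)],
    logger,
)
print(summary)                 # high-level view for stakeholders
print(log_stream.getvalue())   # step-level detail for debugging
```

The summary dictionary is the kind of data a dashboard would consume, while the log stream carries the detail a tester needs to diagnose the failure.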

#24 What are the best practices for maintaining automation test scripts?

Key Strategies

- Modularization: I break down scripts into smaller, reusable functions or components. This promotes code readability, isolates changes, and minimizes the ripple effects of updates.

- Page Object Model (POM): The POM is a cornerstone of maintainability. Encapsulating UI element locators separately from test logic makes scripts exceptionally resistant to application interface changes.

- Clear Naming & Comments: Descriptive names for variables, functions, and tests, along with concise comments, make the code self-documenting. This is vital for quick understanding, especially in collaborative settings.

- Version Control: A system like Git is essential. I track changes, enabling rollbacks if necessary, and facilitate team contributions to the test suite.

- Data-Driven Approach: I separate test data from test logic using external files (e.g., Excel, CSV) or databases. This allows for updating data and running diverse scenarios without touching the core scripts.

- Regular Reviews & Refactoring: Maintenance shouldn’t be purely reactive. Proactive code reviews help me identify areas for improvement, remove redundancies, and continuously enhance the script’s efficiency and readability.
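
The Page Object Model idea can be sketched in a few lines. The `FakeDriver` class below is a hypothetical stand-in for a real browser driver, and its `type_into` and `click` methods are assumptions made for this example rather than a real library's API; the point is only where the locators live.

```python
class FakeDriver:
    """Minimal stand-in for a browser driver (an assumption, not a real API)."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def type_into(self, locator, text):
        self.fields[locator] = text

    def click(self, locator):
        self.clicked.append(locator)


class LoginPage:
    """Page object: locators live here, so a UI change touches only this class."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


driver = FakeDriver()
# The test reads as intent ("log in") and never mentions a locator.
LoginPage(driver).log_in("alice", "s3cret")
```

If the submit button's locator changes, only the `SUBMIT` constant needs an update, and every test that logs in keeps working.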

Beyond the Technical

- Test Design: Well-designed test cases from the outset reduce the need for frequent changes. I focus on creating clear, atomic tests targeting specific functionalities.

- Team Communication: I promote collaboration between testers and developers, ensuring that test scripts remain aligned with the evolving application architecture and that any ‘testability’ concerns are addressed early.

Emphasizing ROI

I recognize that test maintenance is an investment. Regularly assessing the benefits of automation against the maintenance costs ensures that the suite remains a valuable asset, not a burden.

Why this works in an interview

- Not just a list: Provides explanations alongside practices, showing deeper understanding.

- Considers the Long-Term: Acknowledges that maintenance is about more than fixing broken things.

- Focus on Collaboration: Shows you understand testing’s wider impact on the development team.

#25 What are a few drawbacks of Selenium IDE?

While Selenium IDE remains a valuable tool for getting started with automation, it’s important to be aware of its limitations in 2024, especially for large-scale or complex testing scenarios. Here’s my breakdown:

Key Drawbacks

- Browser Limitations: Primarily designed for Firefox and Chrome, Selenium IDE’s support for other browsers can be inconsistent. In the era of cross-browser compatibility, this necessitates additional tools or workarounds.

- Limited Programming Constructs: Selenium IDE’s record-and-playback core can make it challenging to implement complex logic like conditional statements, loops, or robust data handling.

- Test Data Management: It lacks built-in features for extensive data-driven testing. Integrating external data sources or creating dynamic test data can be cumbersome.

- Error Handling: Debugging and error reporting can be basic, making it harder to pinpoint the root cause of issues in intricate test suites.

- Test Framework Integration: Selenium IDE doesn’t natively integrate with advanced testing frameworks like TestNG or JUnit, limiting its use in well-structured, large-scale projects.

- Scalability: While suitable for smaller test suites, Selenium IDE becomes less manageable as test projects grow, leading to maintainability challenges.

- Object Identification: Can struggle with dynamically changing elements or complex web applications, requiring manual intervention to update locators.

When Selenium IDE Remains Useful (in 2024)

- Rapid Prototyping: Ideal for quickly creating simple tests to verify basic functionality.

- Exploratory Testing Aid: Can help map out elements and potential test flows before building more robust scripts in frameworks like Selenium WebDriver.

- Accessibility: Its lower technical barrier of entry makes it a starting point for those less familiar with coding.

Key Takeaway

Selenium IDE is a helpful entry point into automation, but for robust, scalable testing in 2024, transitioning to frameworks like Selenium WebDriver, paired with a programming language, becomes essential. These offer more flexibility, language support, and integration capabilities for complex real-world testing needs.

#26 Name the different scripting techniques for automation testing?

Core Techniques

- Linear Scripting (Record and Playback): The most basic technique, where user interactions are recorded and then played back verbatim. While simple to get started with, it often results in inflexible and difficult-to-maintain scripts.

- Structured Scripting: Introduces programming concepts like conditional statements (if-else), loops (for, while), and variables. This enables more adaptable tests and basic data-driven execution.

- Data-Driven Scripting: Separates test logic from test data. Data is stored in external sources (like spreadsheets, CSV files, or databases) and dynamically fed into tests, allowing for a single test to be executed with multiple input sets.

- Keyword-Driven Scripting: Builds a layer of abstraction through keywords that represent high-level actions. This makes tests readable for even non-technical team members, but requires more up-front planning and implementation.

- Hybrid Scripting: Combines the strengths of various techniques to achieve a balance of maintainability, data-driven flexibility, and ease of understanding.
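
As an illustration of the keyword-driven technique, the sketch below maps keywords to small Python functions and runs a test written as a table of steps. The keyword names, the `FakeApp` class, and the example URL are hypothetical and chosen only for this example.

```python
class FakeApp:
    """Stand-in application state (hypothetical, for illustration only)."""

    def __init__(self):
        self.url = None
        self.fields = {}


def open_page(app, url):
    app.url = url


def enter_text(app, field, value):
    app.fields[field] = value


def verify_field(app, field, expected):
    actual = app.fields.get(field)
    if actual != expected:
        raise AssertionError(f"{field}: expected {expected!r}, got {actual!r}")


# The abstraction layer: each keyword maps to one implementation.
KEYWORDS = {"open": open_page, "enter": enter_text, "verify": verify_field}

# A test case expressed as rows of (keyword, arguments...).
STEP_TABLE = [
    ("open", "https://example.test/login"),
    ("enter", "username", "alice"),
    ("verify", "username", "alice"),
]


def run_keywords(app, table):
    """Execute each row by looking up its keyword; return the number of steps run."""
    for keyword, *args in table:
        KEYWORDS[keyword](app, *args)
    return len(table)


app = FakeApp()
steps_run = run_keywords(app, STEP_TABLE)
```

The step table could just as well come from a spreadsheet, which is what makes this style approachable for non-technical team members, at the cost of building the keyword layer first.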

Beyond the Basics

- Behavior-Driven Development (BDD): Uses a natural language syntax (like Gherkin) to define test scenarios, fostering collaboration between business analysts, developers, and testers.

- Model-Based Testing (MBT): Employs models to represent application behavior. These models can automatically generate test cases, potentially reducing manual test design efforts.

Choosing the Right Technique

In an interview, you should emphasize that there’s no single “best” technique. The selection depends on factors like:

- Team Skillset: The complexity of the technique should match the team’s technical abilities.

- Application Complexity: Simple applications might suffice with linear scripting, while complex ones benefit from more structured approaches.

- Test Case Nature: Data-driven testing is ideal for scenarios with multiple input variations.

- Collaboration Needs: BDD or keyword-driven approaches enhance communication with stakeholders.

#27 How do you select test cases for automation?

Key Selection Criteria

- Repetition: Tests that need to be executed frequently across multiple builds, regressions, or configurations are prime candidates for automation.

- Risk: Automating test cases covering critical, high-risk areas of the application provides a valuable safety net against failures in production.

- Complexity: Time-consuming or error-prone manual tests often gain significant efficiency and accuracy when automated.

- Stability: Mature features with minimal UI changes are less likely to cause script maintenance overhead compared to highly volatile areas.

- Data-Driven Potential: Test cases involving multiple data sets or complex input combinations are ideally suited for automation with data-driven approaches.

- Testability: Consider whether the application is designed with automation in mind – are elements easily identifiable, and are there ways to interact programmatically with its components?

Prioritization & Evaluation

Explain that you don’t view automation as a “one size fits all” solution. Instead, you would:

- Start with High-Impact Tests: Initially, focus on automating those test cases offering immediate and significant returns on time and effort invested.

- Continuous Evaluation: Review test suites regularly with stakeholders to identify evolving automation opportunities and ensure existing scripts are providing value.

- Hybrid Approach: Recognize that a combination of manual and automated testing is often the most effective strategy, especially in dynamic projects.

- ROI Analysis: Consider development time, maintenance effort, and the potential savings in manual testing when estimating the return on investment (ROI) of automating each test case.
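
One simple way to put numbers on the ROI analysis is sketched below. The formula used, (hours saved minus hours invested) divided by hours invested, is a common simplification and an assumption of this example, and all the figures are hypothetical.

```python
import math


def automation_roi(build_hours, maintenance_hours_per_run, manual_hours_per_run, runs):
    """Return ROI as a fraction: (hours saved - hours invested) / hours invested."""
    invested = build_hours + maintenance_hours_per_run * runs
    saved = manual_hours_per_run * runs
    return (saved - invested) / invested


def break_even_runs(build_hours, maintenance_hours_per_run, manual_hours_per_run):
    """Smallest number of runs at which saved hours cover invested hours."""
    net_saving_per_run = manual_hours_per_run - maintenance_hours_per_run
    if net_saving_per_run <= 0:
        return None  # automation never pays off under these assumptions
    return math.ceil(build_hours / net_saving_per_run)


# Hypothetical figures: 40 h to build, 0.5 h upkeep per run, 2 h per manual run.
roi = automation_roi(build_hours=40, maintenance_hours_per_run=0.5,
                     manual_hours_per_run=2, runs=50)
break_even = break_even_runs(build_hours=40, maintenance_hours_per_run=0.5,
                             manual_hours_per_run=2)
print(f"ROI after 50 runs: {roi:.0%}, break-even after {break_even} runs")
```

With these made-up inputs, a test that runs only a handful of times never recovers its build cost, which is the quantitative version of the "repetition" criterion above.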

Emphasizing a Strategic Mindset:

In an interview, I’d stress that my goal is to maximize the efficiency and effectiveness of our quality assurance efforts through automation. I make calculated decisions based on a balance of technical suitability and potential benefits to the project.

#28 What would be your criteria for picking an automation tool for your specific scenarios?

- Technology Fit: Does the tool support the web, mobile, or API technologies I’m testing?

- Ease of Use: Is it suitable for my team’s skillset, promoting adoption and maintainability?

- Framework Flexibility: Can I create my desired test framework type (data-driven, keyword-driven, etc.)?

- Scalability: Will the tool grow with my project’s increasing complexity and test suite size?

- Reporting & Integrations: Does it integrate with CI/CD pipelines and provide the reporting my team needs?

- Community & Support: Are there resources and documentation for troubleshooting, especially for commercial tools?

- Cost-Benefit Analysis: Does the initial investment and ongoing maintenance align with the expected ROI for my project?
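
The criteria above can be combined into a weighted scoring matrix. This is one possible way to structure the comparison, not a prescribed method: the weights, the 1 to 5 scores, and the "Tool A" and "Tool B" candidates are all hypothetical.

```python
# Hypothetical weights: how much each criterion matters to this project.
WEIGHTS = {
    "technology_fit": 5,
    "ease_of_use": 3,
    "scalability": 3,
    "integrations": 4,
    "cost": 2,
}

# Hypothetical 1-5 scores for two placeholder candidates.
SCORES = {
    "Tool A": {"technology_fit": 4, "ease_of_use": 3, "scalability": 5,
               "integrations": 4, "cost": 2},
    "Tool B": {"technology_fit": 3, "ease_of_use": 5, "scalability": 3,
               "integrations": 3, "cost": 5},
}


def weighted_total(scores, weights):
    """Sum of weight * score over every criterion."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


totals = {tool: weighted_total(scores, WEIGHTS) for tool, scores in SCORES.items()}
ranking = sorted(totals, key=totals.get, reverse=True)
print(totals, ranking)
```

The value of the exercise lies less in the final number than in forcing the team to agree on the weights before comparing tools.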

#29 Can automation testing completely replace manual testing?

No, automation testing cannot fully replace manual testing. Each has its unique strengths. Here’s why a balanced approach is essential:

- Automation Excels: Repetitive tasks, regressions, smoke tests, data-driven scenarios, and performance testing are prime automation targets.

- Humans are Essential: Exploratory testing, usability evaluation, complex scenarios needing intuition, and edge case discovery require the human touch.

- Strategic Combination: The most effective quality assurance leverages automation for predictable, repetitive tasks while freeing up skilled manual testers for high-value, creative testing.

In short, I view automation and manual testing as complementary tools, maximizing the value of our testing efforts.

#30 Describe the role of automation testing in the context of Agile and DevOps methodologies.

Automation as a Key Enabler

- Continuous Testing in Agile: In Agile’s rapid iterations, automation enables frequent testing without sacrificing development speed. Automated regression suites offer a safety net as changes are introduced.

- Shift-Left Testing in DevOps: Automation allows testing to begin earlier in the development lifecycle. Testers can write automated unit or API tests alongside developers, catching issues before they reach later, costlier stages.

- Accelerating Feedback Loops: Automated test suites, integrated into CI/CD pipelines, provide immediate feedback to developers upon code changes. This fosters collaboration and shortens bug fix times.

- Confidence in Deployments: Comprehensive automated smoke tests and key functional tests executed after deployment give teams confidence in pushing updates quickly and frequently.

- Quality at Scale: As applications grow, automated checks ensure that new features don’t inadvertently cause issues elsewhere, maintaining quality in a complex environment.

Beyond the Technical

Automation in Agile/DevOps demands:

- Testers as Developers: A shift in mindset towards integrating automation into the development process and a willingness to collaborate closely with the entire team.

- Tooling Expertise: Selecting and integrating the right automation tools into existing pipelines is essential.

Why this works in an interview

- Doesn’t just list benefits: Explains how automation aligns with the core philosophies of Agile and DevOps.

- Shows Big Picture Thinking: Highlights the impact of automation on the workflow, not just individual tests.

- Adaptability: Recognizes that automation success in Agile/DevOps requires a changing mindset.

#31 Which types of test cases will you not automate?

- Exploratory Tests Requiring Intuition: Tests involving creative problem-solving, user experience evaluation, or uncovering edge cases based on a “gut feeling” are best tackled by skilled manual testers.

- Tests with Unstable Requirements: Frequently changing functionalities aren’t ideal for automation, as maintaining the scripts could negate the time savings.

- One-Off or Infrequent Tests: If a test is unlikely to be repeated, the investment in automation might outweigh the benefits.

- Visually-Oriented Tests: While some image-based automation exists, for tasks like verifying intricate UI layout or visual aesthetics, manual testing often delivers results more effectively.

- Tests with Unreliable Infrastructure: If flaky test environments or external dependencies cause unpredictable results, automation can lead to false positives, eroding trust in the suite.

Important Considerations:

- Project Context Matters: A test deemed unsuitable for automation in one project might be a good candidate in another with different constraints.

- The Decision is Fluid: As the application matures, or if tools and team skills evolve, some initially manual tests might become prime targets for automation.

- Collaboration is Key: I always discuss these trade-offs with developers and stakeholders to align testing strategy with overall project goals.

#32 Can you discuss the role of exploratory testing in conjunction with automation testing?

Exploratory Testing

- Human Intuition: Leverages a tester’s creativity, experience, and domain knowledge to discover unexpected behaviors and edge cases that automated scripts might miss.

- Adaptability: Excels in areas where requirements are fluid, the application is undergoing rapid change, or investigating a specific issue.

- Discovery: Uncovers hidden bugs, usability problems, and potential areas for future automation.

Automation Testing

- Efficiency: Runs regression suites and repetitive tests with high speed and consistency.

- Scalability: Handles large-scale test scenarios more efficiently than manual efforts could.

- Reliability: Ensures core functionality remains intact across frequent code changes.

The Complementary Relationship

- Not a Replacement: Exploratory testing doesn’t replace automation; they work best hand-in-hand.

- Finding the Balance: Projects should find a balance between exploratory and automated testing based on the development lifecycle stage and risk areas.

- Guiding Automation: Results from exploratory tests provide valuable insights to drive the creation of new, targeted automated test cases.

- Long-Term Quality: Iteratively combining the two approaches ensures a well-rounded, efficient, and adaptive testing strategy that boosts overall software quality.

In an interview, you’d also have to highlight:

- Personal experience: Give examples of times when you used exploratory testing to uncover problems that led to improvements in the automated suites.

#33 Describe your plan for automation testing of e-commerce web applications, focusing on the checkout process and inventory management features.

Understanding the Focus Areas

“First, I want to ensure I’m crystal clear on the key functionalities we’re targeting. For the checkout process, that means ensuring a smooth, secure, and accurate experience for the customer. Testing must cover everything from adding items to the cart, all the way through applying discounts, processing payments, and confirming the order.

For inventory management, my primary goal is to ensure total synchronization between the website’s displayed stock and the actual inventory system. Are there any specific pain points or known areas of concern within either of these that I should be especially aware of?”

Test Strategy and Approach

“Given the critical nature of these features, I’d recommend a mixture of scripted and exploratory testing.

- Scripted Automation: I’d prioritize building a core suite of automated tests using a tool like Selenium WebDriver. This would cover the fundamental checkout flows with different test data to simulate various customer scenarios, payment options, and potential errors.

- Exploratory Testing: This is especially important for the user experience side of checkout. I’d want to spend time putting myself in the customer’s shoes to proactively try and discover usability issues or unclear messaging that could cause frustration.

For inventory, I’d likely use an API testing tool alongside my UI tests. This allows me to directly query the inventory system and ensure immediate updates and accurate stock levels are reflected on the frontend.”
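
The inventory check described above can be sketched as a comparison between what the backend reports and what the storefront displays after a purchase. Both classes below are hypothetical in-memory stand-ins; a real test would call the inventory API and read the stock figure from the UI.

```python
class FakeInventoryApi:
    """Stand-in for the inventory backend (hypothetical)."""

    def __init__(self, stock):
        self._stock = dict(stock)

    def get_stock(self, sku):
        return self._stock[sku]

    def reserve(self, sku, quantity):
        if quantity > self._stock[sku]:
            raise ValueError("insufficient stock")
        self._stock[sku] -= quantity


class FakeStorefront:
    """Stand-in for the UI layer, which caches the stock figure it displays."""

    def __init__(self, api, refresh_after_checkout=True):
        self._api = api
        self._refresh = refresh_after_checkout
        self._cache = {}

    def displayed_stock(self, sku):
        if sku not in self._cache:
            self._cache[sku] = self._api.get_stock(sku)
        return self._cache[sku]

    def checkout(self, sku, quantity):
        self._api.reserve(sku, quantity)
        if self._refresh:
            self._cache.pop(sku, None)  # force a fresh read on next display


def stock_matches_after_purchase(api, storefront, sku, quantity):
    """Buy `quantity` units, then check the backend and the UI agree on the new level."""
    before = storefront.displayed_stock(sku)
    storefront.checkout(sku, quantity)
    backend = api.get_stock(sku)
    return backend == before - quantity and storefront.displayed_stock(sku) == backend


good_api = FakeInventoryApi({"SKU-1": 5})
in_sync = stock_matches_after_purchase(good_api, FakeStorefront(good_api), "SKU-1", 2)

stale_api = FakeInventoryApi({"SKU-1": 5})
stale_ui = FakeStorefront(stale_api, refresh_after_checkout=False)
stale_detected = not stock_matches_after_purchase(stale_api, stale_ui, "SKU-1", 2)
```

The second scenario simulates a storefront that keeps showing a stale number, which is exactly the kind of discrepancy a combined API and UI check is meant to catch.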

Collaboration and Continuous Improvement

“Strong communication with both development and business stakeholders is key in this area. I want to understand any past issues with payment gateways, inventory discrepancies, or user complaints that can help refine my test cases. Ideally, my automated tests would be integrated into the CI/CD pipeline to provide rapid feedback after each code change.”

Irrespective of the choice of your automation tools like SilkTest, QTP, Selenium or any other test tool you can follow the following rules

#34 How do you ensure test coverage across various user personas or roles in your automation testing?

1. Identifying User Personas

- Collaboration: I’d work closely with stakeholders (product owners, marketing, UX) to define distinct user personas based on their goals, behaviors, and technical expertise. It’s crucial to go beyond basic demographics.

- Examples: A persona might be a “casual shopper” who primarily browses, a “coupon-savvy customer” focused on deals, or an “administrator” managing inventory.

2. Role-Specific Test Scenarios

- Targeted Flows: For each persona, I’d map out their typical journeys through the application. An admin wouldn’t need a full checkout test, while a casual shopper might require usability tests emphasizing search and navigation.

- Permissions: If the system has role-based access, I’d carefully design tests to validate both allowed actions and ensure restricted actions are correctly blocked for each persona.

- Data-Driven Approach: Use data sets with information tailored to each persona (e.g., preferred payment methods, shipping addresses) to make tests more realistic.
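
Role-based permission checks lend themselves to a data-driven matrix that covers both allowed and blocked actions. In this sketch the roles, actions, and the `GRANTED` table standing in for the application's real access rules are all hypothetical.

```python
# Hypothetical expected-permission matrix, agreed with stakeholders.
EXPECTED = {
    "casual_shopper": {"browse": True, "checkout": True, "edit_inventory": False},
    "administrator": {"browse": True, "checkout": False, "edit_inventory": True},
}

# Stand-in for the application's actual access rules (an assumption).
GRANTED = {
    "casual_shopper": {"browse", "checkout"},
    "administrator": {"browse", "edit_inventory"},
}


def is_allowed(role, action):
    """Ask the (fake) application whether `role` may perform `action`."""
    return action in GRANTED.get(role, set())


def check_permissions(expected):
    """Return every (role, action) pair where actual access differs from expected."""
    mismatches = []
    for role, actions in expected.items():
        for action, should_allow in actions.items():
            if is_allowed(role, action) != should_allow:
                mismatches.append((role, action))
    return mismatches


mismatches = check_permissions(EXPECTED)
print("permission mismatches:", mismatches)
```

Because each `False` entry is checked as strictly as each `True` entry, the matrix verifies that restricted actions are blocked, not only that permitted ones work.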

3. Test Suite Organization

- Modularization: Create reusable code blocks for actions common to multiple personas (login, search, etc.). This aids maintainability and makes persona-specific variations easier.

- Clear Labeling or Tagging: Tagging tests by persona allows easy filtering and execution of targeted test suites as needed.

4. Prioritization and Expansion

- Critical First: Focus on the personas driving core business functions. A smooth experience for the typical buyer is often paramount.

- Ongoing Collaboration: Stay in touch with the team regarding any changes to user profiles or the introduction of new roles, necessitating test suite updates.

Interview Emphasis

- Proactivity: You have to stress that persona consideration should start early, during test design, not as an afterthought.

- Real-World Examples: Mention cases where role-based testing uncovered unexpected issues or guided prioritization.

#35 What are the key differences between scripted and scriptless automation testing approaches?

Scripted Testing

- Coding-Centric: Requires testers to have programming expertise (Java, Python, etc.) to write detailed test scripts that dictate every action and expected result.

- Flexibility: Offers immense customization for complex test scenarios, fine-grained control, and integration with external tools.

- Maintenance: Can be time-consuming to maintain as application updates often necessitate changes to the underlying test scripts.

Scriptless Testing

- Visual Interface: Leverages visual modeling, drag-and-drop elements, or keyword-driven interfaces for test creation. Testers don’t need traditional coding skills.

- Accessibility: Enables non-technical team members (business analysts, domain experts) to participate in testing.

- Faster Initial Setup: Test cases can often be built more quickly in the beginning compared to scripted approaches.

- Potential Limitations: Might be less adaptable for highly intricate test scenarios or custom integrations compared to the full flexibility of scripted testing.

In an interview, you’d have to further emphasize:

- Context Matters: The best approach depends on the project’s complexity, the team’s skillsets, and the desired speed vs. long-term maintainability balance.

- Hybrid Solutions: Many projects benefit from a mix of scripted and scriptless techniques to leverage the strengths of both.

#36 Describe a situation where you had to automate API testing. What tools and techniques did you use?

An automation framework is a software platform that provides the structure and ecosystem needed to automate and run test cases. It also defines a set of rules that users follow for efficient automation testing.

Some of the rules are:

- Rules for writing test cases.

- Coding rules for developing test handlers.

- Prototype for Input test data.

- Management of Object repository.

- Log configuration.

- Usage of Test results and reporting.

#37 State a few coding practices to follow during automation?

1. Maintainability

- Modularity: Break code into reusable functions or components that perform specific tasks. This improves readability and makes updates easier.

- Meaningful Naming: Use descriptive variable, function, and test case names that clearly convey their purpose.

- Comments & Documentation: Explain complex logic (but don’t overcomment obvious code). Document the overall purpose of test suites.

2. Reliability

- Robust Error Handling: Implement graceful error handling to prevent test scripts from failing unexpectedly. Log errors for analysis.

- Independent Tests: Avoid tests that depend on the results of others. This isolates failures and makes debugging easier.

- Data Isolation: Use unique test data sets where possible to prevent conflicts or side effects within the test environment.
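
Independent tests and data isolation can be sketched together: each check creates its own uniquely named record and always cleans it up. The `InMemoryUserStore` class and the helper names are hypothetical stand-ins for a real test environment.

```python
import uuid


class InMemoryUserStore:
    """Stand-in for a shared test environment (hypothetical)."""

    def __init__(self):
        self.users = {}

    def create(self, email):
        if email in self.users:
            raise ValueError(f"duplicate user: {email}")
        self.users[email] = {"email": email}

    def delete(self, email):
        self.users.pop(email, None)


def unique_email(prefix="qa"):
    """Generate an address that will not collide with other tests' data."""
    return f"{prefix}-{uuid.uuid4().hex[:8]}@example.test"


def run_isolated(store, check):
    """Create a fresh user, run the check, and always clean up afterwards."""
    email = unique_email()
    store.create(email)
    try:
        return check(store, email)
    finally:
        store.delete(email)


store = InMemoryUserStore()
first = run_isolated(store, lambda s, e: e in s.users)
second = run_isolated(store, lambda s, e: e in s.users)
```

Because neither run relies on data left behind by the other, the two checks can execute in any order, or in parallel, without affecting each other's results.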

3. Efficiency

- Test Design: Plan tests to minimize unnecessary steps and focus on the most critical scenarios.

- Object Repositories: Store UI element locators (e.g., IDs, XPaths) centrally to improve maintainability and reduce the impact of application UI changes.

- Waiting Strategies: Implement intelligent waits (explicit, implicit) instead of arbitrary sleep timers to keep tests running smoothly.

4. Collaboration

- Version Control: Use a system like Git for tracking changes, enabling rollback, and facilitating team collaboration.

- Coding Standards: Adhere to team- or industry-standard coding conventions for consistency and ease of understanding.

- Peer Reviews: Have other team members review your automation code for clarity and potential improvements.

Interview Emphasis

- Adaptability: You have to mention that the ideal practices can evolve with the project’s complexity and team structure.

- Tradeoffs: Also, you need to acknowledge the situations where a slight compromise in maintainability could be acceptable for quick, exploratory test creation.

#38 State the scripting standard for automation testing?

- Language-Specific Conventions: Follow the recommended style guides and best practices for your chosen programming language.

- Design Patterns: Leverage patterns like Page Object Model (POM) and Data-Driven Testing for structure and flexibility.

- Framework Best Practices: Adhere to your chosen testing framework’s recommended practices for organization and reporting.

- Readability & Maintainability: Emphasize clear naming conventions, modular code, and meaningful comments.

#39 How do you handle security testing aspects, such as vulnerability scanning, in automated test suites?

1. Tool Selection

- Specialized Security Scanners: Tools like OWASP ZAP, Burp Suite, or commercial alternatives offer dedicated vulnerability scanning features.

- Integration Capabilities: The ideal tool should integrate with your testing framework and CI/CD pipeline for automated execution.

2. Test Case Design

- Targeted Scans: Focus on high-risk areas of the application (login forms, payment sections, areas handling sensitive data).

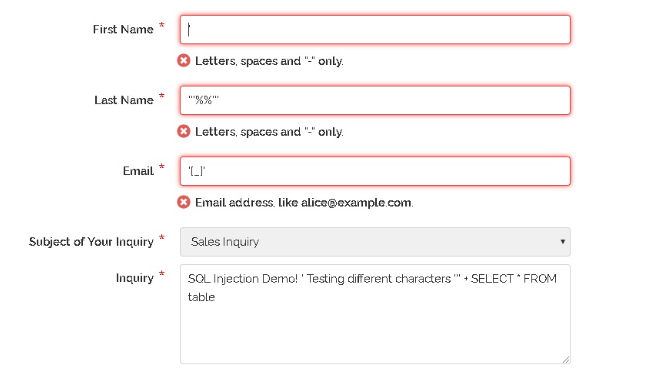

- Common Vulnerabilities: Prioritize tests covering OWASP Top 10 (SQL injection, XSS, etc.).

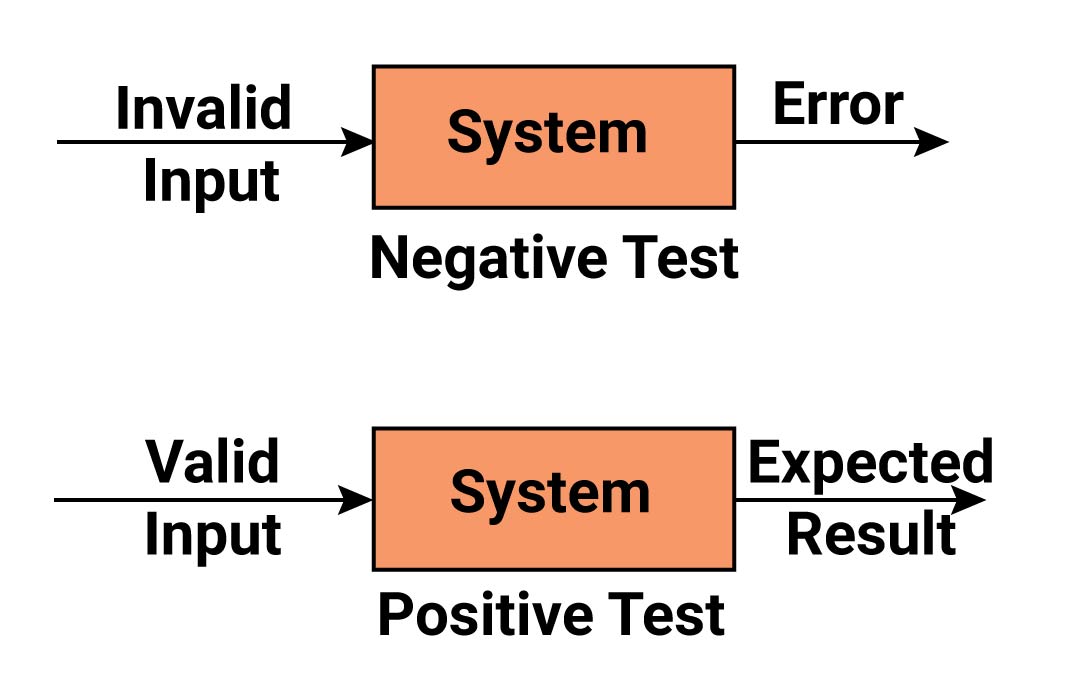

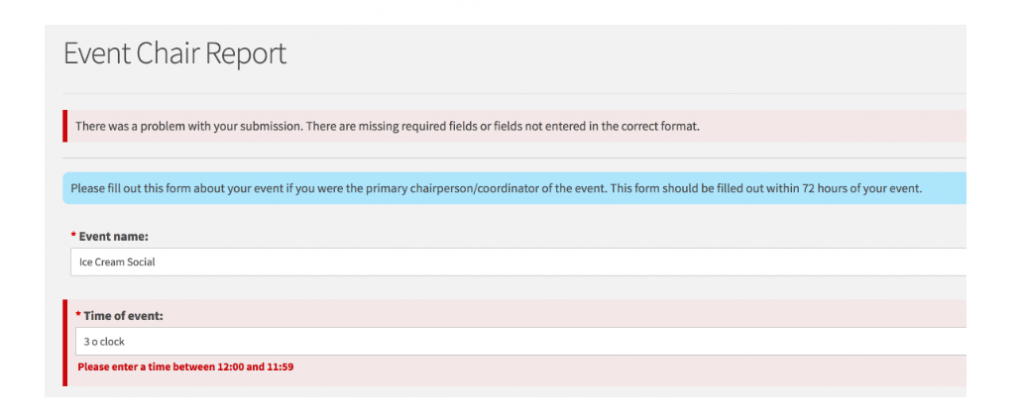

- Negative Testing: Include tests with intentionally malicious input to verify your application’s resilience.
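
A negative test can be as simple as feeding known-bad strings to a function and checking that they come back neutralized. The sketch below covers output escaping only and uses Python's standard `html.escape`; the `render_comment` function is a hypothetical stand-in for application code, and the payload list is a small illustrative sample, not a complete attack catalogue.

```python
import html

# A few illustrative payloads; real suites draw on much larger lists.
MALICIOUS_INPUTS = [
    "<script>alert('xss')</script>",
    "\"><img src=x onerror=alert(1)>",
    "' OR '1'='1",
]


def render_comment(text):
    """Stand-in for application code that should escape user input."""
    return f"<p>{html.escape(text)}</p>"


def find_unescaped(render, payloads):
    """Return the payloads whose rendered output still contains raw markup characters."""
    failures = []
    for payload in payloads:
        output = render(payload)
        inner = output[len("<p>"):-len("</p>")]  # strip the wrapper the renderer adds
        if any(char in inner for char in "<>\"'"):
            failures.append(payload)
    return failures


safe_failures = find_unescaped(render_comment, MALICIOUS_INPUTS)
unsafe_failures = find_unescaped(lambda text: f"<p>{text}</p>", MALICIOUS_INPUTS)
print(len(safe_failures), "failures with escaping,",
      len(unsafe_failures), "without")
```

Note that escaping HTML output does nothing against SQL injection; that payload is included only to show the check flags raw quotes, and database-level defenses need their own tests.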

3. Collaboration & Remediation

- Security Expertise: Work closely with security specialists or team members familiar with potential attack vectors.

- Prioritization: Prioritize fixing critical vulnerabilities as soon as they’re discovered.

- Regular Updates: Keep security test suites updated to reflect new threats and changes in the application.

Interview Emphasis

- It’s Not a Replacement: Automated security tests augment, but don’t fully replace, dedicated penetration testing or security audits.

- Risk-Based Approach: I’d stress the importance of tailoring the level of security testing to the specific application’s risk profile.

Additional Considerations

- Test Environment: If possible, consider isolated environments dedicated to security testing.

- False Positives: Be prepared to handle and triage potential false positives reported by automated tools.

#40 What is your next step after identifying your automation test tool?

“Selecting the tool is a crucial first step, but I see it as the foundation for a successful automation strategy. My next actions focus on ensuring the tool’s effective use and maximizing returns:

- Proof of Concept (POC): I’d start with a targeted pilot on a small, representative part of the application. This allows me to:

  - Validate the Tool: Confirm it aligns with our technical stack and addresses our key pain points.

  - Team Buy-In: Demonstrate the tool’s potential to stakeholders and get early feedback.

- Framework Design: While the tool provides capabilities, I’d outline a robust framework around it:

  - Standards & Patterns: Define best practices for script creation, data management, reporting, etc.

  - Scalability: Plan for how the framework will grow with the complexity of our test suite.

  - Maintainability: Prioritize code organization and reusability to ease future maintenance.

- Team Training & Adoption:

  - Knowledge Transfer: If I wasn’t the sole person evaluating the tool, I’d share my findings and lessons learned with the wider testing team.

  - Skill Development: Plan workshops or hands-on exercises, especially if team members lack experience with the chosen tool.

  - Mentorship: Offer ongoing support to encourage adoption and address questions.

- Integration & Optimization:

  - CI/CD: Aim for seamless integration into our development pipeline to provide rapid feedback.

  - Test Environment Alignment: Ensure the tool works reliably with our staging and testing environments.

- Metrics & Refinement:

  - Beyond Execution Reports: Establish KPIs like time saved vs. manual testing, bugs found early, etc., to demonstrate the value of automation.

  - Iterative Approach: Regularly assess the tool, framework, and processes, looking for areas for improvement.”

Interview Emphasis

- Proactive Approach: Highlight that you don’t wait for everything to be handed to you; you take the initiative to build out the essential infrastructure for automation success.

- Team Player: Emphasize the importance of enabling the entire team and ensuring smooth adoption.

#41 What are the characteristics of a good test automation framework?

Core Characteristics

- Maintainability: Well-structured code, clear separation of concerns, and adherence to best practices make the framework easy to update as the application evolves.

- Scalability: It efficiently handles a growing test suite and increasing complexity without major overhauls.

- Reliability: Tests produce consistent results, minimizing false positives/negatives, to build trust in the automation.

- Reusability: Modular components and data-driven approaches allow the same test logic to be easily adapted to different scenarios.

- Efficiency: Tests run quickly, and the framework is optimized for test execution speed within the CI/CD pipeline.

Beyond the Basics

- Readability: Even non-technical team members should be able to grasp the high-level intent of tests.

- Robust Reporting: Provides clear insights into test outcomes, failures, and trends to enhance debugging and decision-making.

- Ease of Use: Testers (especially less experienced ones) should find it straightforward to create and maintain new test cases.

- Cross-Platform Support: Ideally, it can execute tests across various browsers, operating systems, and devices.

- Integration Capabilities: Seamlessly integrates with CI/CD tools, bug trackers, and other systems in the development ecosystem.

In an interview, I’d also stress:

- Context Matters: The “perfect” framework doesn’t exist. The ideal characteristics depend on the project’s specifics, the team’s skillsets, and available resources.

- Prioritization: While all characteristics are desirable, you may need to prioritize certain ones (e.g., maintainability over lightning-fast execution speed) during the initial build-out.

#42 How do you handle localization and internationalization testing using automation tools?

Understanding the Concepts

- Internationalization (i18n): Designing software from the ground up to adapt to different languages, regions, and cultural conventions.

- Localization (l10n): The process of actually adapting the software to a specific target locale.

My Automation Strategy

Test Case Focus:

- Text Translation: Verify translated UI elements display correctly without truncation or overlap.

- Date/Time: Check adherence to local formats, and correct time zone adjustments.

- Currency & Number Formatting: Ensure these display according to the target region’s standards.

- Right-to-Left Support: Test UI layout and text flow if supporting RTL languages.

- Regulatory Differences: Adapt tests for locale-specific legal requirements (e.g., data privacy).

Tool Selection & Preparation:

- Frameworks with i18n Support: Selenium, Appium, and similar tools can launch the browser or device under a specific language or locale setting, and can be extended with custom helpers to facilitate these tests.

- Resource Bundles: Ensure proper loading and switching of locale-specific text and data.

Data-Driven Approach:

- Data Sets: Maintain data sets for each locale (text strings, dates, currencies, etc.).

- Parameterized Tests: Write test cases that iterate through these data sets.

Collaboration & Reporting:

- Contextual Experts: Work with native speakers or regional experts for cultural correctness.

- Feedback Channels: Establish clear reporting for subjective elements requiring manual review.
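The data-driven approach described above can be sketched as one check that loops over per-locale expectations. This is a minimal sketch, not a full implementation: `LOCALE_CASES`, `fake_app_render`, and `run_locale_checks` are hypothetical names, the expected strings are illustrative, and the fake renderer stands in for reading values from the real UI.

```python
from datetime import date

# Hypothetical per-locale expectations; in a real suite these would live in
# data files reviewed by native speakers. Real output may use non-breaking
# spaces before currency symbols; a plain space is used here for simplicity.
LOCALE_CASES = {
    "en-US": {"date": "03/14/2025", "price": "$1,234.50"},
    "en-GB": {"date": "14/03/2025", "price": "£1,234.50"},
    "de-DE": {"date": "14.03.2025", "price": "1.234,50 €"},
}


def fake_app_render(locale: str, when: date, amount: float) -> dict:
    """Stand-in for reading the rendered values from the UI under test."""
    day, month, year = f"{when.day:02d}", f"{when.month:02d}", str(when.year)
    us_number = f"{amount:,.2f}"  # e.g. 1,234.50
    if locale == "en-US":
        return {"date": f"{month}/{day}/{year}", "price": f"${us_number}"}
    if locale == "en-GB":
        return {"date": f"{day}/{month}/{year}", "price": f"£{us_number}"}
    if locale == "de-DE":
        # Swap the separators: 1,234.50 -> 1.234,50
        de_number = us_number.replace(",", "X").replace(".", ",").replace("X", ".")
        return {"date": f"{day}.{month}.{year}", "price": f"{de_number} €"}
    raise ValueError(f"unsupported locale: {locale}")


def run_locale_checks(render) -> list:
    """Run the same comparisons for every locale and collect mismatches."""
    failures = []
    for locale, expected in LOCALE_CASES.items():
        actual = render(locale, date(2025, 3, 14), 1234.5)
        for field, want in expected.items():
            if actual[field] != want:
                failures.append((locale, field, want, actual[field]))
    return failures


print(run_locale_checks(fake_app_render))  # [] when every locale matches
```

Adding a locale then means adding a row of data rather than writing a new test. A test runner's parameterization feature (for example, `pytest.mark.parametrize`) can replace the hand-written loop.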

Interview Points

- Challenges: Acknowledge that fully automating checks for cultural appropriateness is difficult, so hybrid approaches that combine automation with manual review are essential.

- Tool Limitations: Not all tools are created equal; mention that you would research which tool is the best fit for the project.

#43 What is the main reason for testers to refrain from automation? How can they overcome it?

Reasons for Hesitation

- Upfront Investment: Significant time commitment for tool setup, framework creation, and initial test scripting.

- Skill Gaps: Lack of programming knowledge or experience with specific automation tools.

- Maintenance Overhead: The perception that automated tests are difficult to update as the application changes.

- Rapidly Changing UI: Automation might feel futile in the face of frequent UI overhauls during early development phases.

Overcoming the Challenges

- Demonstrate ROI: Focus on automating high-value, repetitive tests to showcase time savings and benefits.

- Training & Mentorship: Provide team members with resources and support to develop automation skills.

- Hybrid Approach: Leverage scriptless tools or record-and-playback features for a smoother transition.

- Modular Design: Emphasize best practices to build maintainable tests.

- Strategic Implementation: Start automation on stable areas of the application, scaling up as confidence grows.

#44 Name the important modules of an automation testing framework.

Core Components:

- Test Script Library: Houses the core test cases, built using your chosen programming language.

- Test Data Source: Manages input data, often separated into files (e.g., CSV, Excel, JSON) or integrated with a database.

- Object Repository: Centralizes UI element locators (especially for Page Object Model approaches) for efficient maintenance.

- Modular Functions: Reusable code blocks for common actions (login, navigation, assertions, etc.).

- Test Configuration: Settings and parameters used by the framework (e.g., target environments, browser types).

Essential Support:

- Reporting Mechanism: Clear and structured test result reporting (integrations with reporting tools are often used).

- Logging: Records actions and errors for debugging.

Advanced Additions (Depending on Context):

- CI/CD Integration: Scripts or plugins to trigger tests automatically as part of your development pipeline.

- Keyword/Data-Driven Layer: Optional abstractions to simplify test creation for less technical testers.

- Parallel Execution: Capabilities to run tests simultaneously for speed.
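To show how the core modules fit together, here is a minimal sketch in Python. All names (`FrameworkConfig`, `LOCATORS`, `FakeDriver`, `login`), the URL, and the credentials are assumptions for illustration. `FakeDriver` only records calls and stands in for a real browser driver.

```python
import csv
import io
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("framework")  # Logging


@dataclass(frozen=True)
class FrameworkConfig:  # Test Configuration
    base_url: str = "https://staging.example.test"
    browser: str = "chrome"


# Object Repository: one place for locators, keyed by logical name.
LOCATORS = {
    "login.username": ("id", "username"),
    "login.password": ("id", "password"),
    "login.submit": ("css", "button[type=submit]"),
}

# Test Data Source: inline CSV here; normally a file or a database.
USERS_CSV = "username,password\nalice,s3cret\nbob,hunter2\n"


def load_users(text: str) -> list:
    return list(csv.DictReader(io.StringIO(text)))


class FakeDriver:
    """Stand-in for a real browser driver; it only records what it was asked to do."""

    def __init__(self):
        self.actions = []

    def open(self, url):
        self.actions.append(("open", url))

    def type(self, locator, text):
        self.actions.append(("type", locator, text))

    def click(self, locator):
        self.actions.append(("click", locator))


def login(driver, config, user):  # Modular Function reused by many tests
    log.info("logging in as %s", user["username"])
    driver.open(config.base_url + "/login")
    driver.type(LOCATORS["login.username"], user["username"])
    driver.type(LOCATORS["login.password"], user["password"])
    driver.click(LOCATORS["login.submit"])


# Test Script: combines configuration, data, locators, and the shared function.
config = FrameworkConfig()
for user in load_users(USERS_CSV):
    driver = FakeDriver()
    login(driver, config, user)
    assert driver.actions[-1] == ("click", LOCATORS["login.submit"])
```

Because the test script touches locators only through `LOCATORS`, a changed element ID is fixed in one place.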

Interview Note: Emphasize that the ideal modules depend on project needs and team skills, and that you are equally comfortable adapting to existing frameworks or designing them from scratch.

#45 What are the advantages of the Modular Testing framework?

Key Advantages

- Maintainability: Dividing tests into logical modules makes them easier to understand, update, and fix without affecting unrelated parts of the application.

- Reusability: Common functions or actions can be encapsulated in modules and reused across numerous test cases, saving development time and reducing code duplication.

- Scalability: Easy to add new test cases and expand the test suite by simply adding new modules, promoting growth alongside application development.

- Improved Readability: Smaller, focused modules enhance code readability and make the overarching test logic easier to grasp.

- Team Collaboration: Testers (even those with less technical expertise) can contribute by creating or maintaining modules that align with their domain knowledge.

Interview Emphasis

- Real-World Impact: I could briefly mention how using a modular framework in past projects saved significant time and effort in test maintenance and expansion.

- Beyond the Basics: I’d acknowledge that upfront planning and thoughtful design are essential to fully realize the benefits of modularity.

#46 What are the disadvantages of the keyword-driven testing framework?

Challenges with Keyword-Driven Testing

- Initial Overhead: There’s a steeper setup cost compared to basic scripted approaches. You need to define keywords, associated actions, and manage the keyword library.

- Technical Expertise: Creating and maintaining the framework often requires stronger programming skills than writing pure test scripts.

- Debugging: Troubleshooting failing tests can be more complex due to the added abstraction layer of keywords.

- Limited Flexibility: For highly intricate tests or custom scenarios, the keyword approach can feel restrictive compared to the full control of code-based scripting.

- Potentially Slower Development: At least for the initial test creation, the keyword approach might add slightly more time compared to directly coding.

Important Considerations:

- Context is Key: These disadvantages are most prominent in small-to-medium projects. For large, complex test suites, the maintainability gains often outweigh the initial challenges.

- Tool Support: Modern keyword-driven tools mitigate some complexity, offering visual interfaces and simpler keyword management.
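To make the abstraction layer concrete, here is a minimal sketch of a keyword table and its interpreter in Python. The keyword names, `FakeApp`, and `run_steps` are hypothetical and do not reflect the API of any particular keyword-driven tool.

```python
class FakeApp:
    """Stand-in for the application under test."""

    def __init__(self):
        self.page = None
        self.fields = {}


def open_page(app, name):
    app.page = name


def enter_text(app, field, value):
    app.fields[field] = value


def verify_field(app, field, expected):
    actual = app.fields.get(field)
    if actual != expected:
        raise AssertionError(f"{field}: expected {expected!r}, got {actual!r}")


# Keyword library: this mapping is the part that needs programming skills.
KEYWORDS = {
    "Open Page": open_page,
    "Enter Text": enter_text,
    "Verify Field": verify_field,
}


def run_steps(app, steps):
    """Interpret a table of (keyword, *arguments) rows."""
    for number, (keyword, *args) in enumerate(steps, start=1):
        action = KEYWORDS.get(keyword)
        if action is None:
            raise KeyError(f"step {number}: unknown keyword {keyword!r}")
        try:
            action(app, *args)
        except AssertionError as exc:
            # Re-raise with the step number so a failure maps back to the table.
            raise AssertionError(f"step {number} ({keyword}): {exc}") from exc


# The test itself is plain data that a non-programmer can edit.
steps = [
    ("Open Page", "login"),
    ("Enter Text", "username", "alice"),
    ("Verify Field", "username", "alice"),
]
app = FakeApp()
run_steps(app, steps)
print(app.page, app.fields)  # login {'username': 'alice'}
```

The interpreter sits between the table and the code that actually fails, which is why debugging takes an extra hop. It is also why a scenario that needs a loop or a conditional has to wait until someone adds a new keyword.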

Interview Emphasis

- Trade-offs: Stress the importance of weighing the investment in a keyword-driven framework against the expected long-term benefits in maintenance and potential tester accessibility.

- My Expertise: Show that you can work within a keyword-driven framework while being fully aware of both its strengths and limitations.

#47 Can we do automation testing without a framework? If yes, how?

Direct Scripting

- Coding Approach: Write test scripts directly in a programming language (Java, Python, etc.) using libraries like Selenium WebDriver for web browser interactions.

- Flexibility: Gives you full control over test structure, reporting, and custom integrations.

- Suitable for: Small-scale projects, teams with strong programming skills, or those focused on proof-of-concept testing.

Record-and-Playback Tools

- Simplified Creation: Many tools allow you to record user actions on a website and “play them back” as automated tests.

- Quick Start: Ideal for rapidly creating basic tests or for testers less familiar with coding.

- Warnings: Recorded tests lack the structure that a framework provides and can become brittle with UI changes.

Hybrid Approach

- Combining Strengths: Leverage record-and-playback for simpler tests and direct scripting for more complex scenarios.

- Pragmatic: Offers flexibility to balance ease of creation against long-term maintainability needs.

Considerations

- Test Data Management: Plan how you’ll handle test data (e.g., CSV files, data providers in your chosen language).

- Reporting: Either use built-in test runner reports or explore reporting libraries.

- Maintenance: Pay attention to code organization and modularity from the start to ease updates.
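A framework-free script can be as small as the sketch below: test data in CSV, a loop, and a hand-rolled report. `attempt_login` is a hypothetical stand-in for the calls that would drive the real application (for example, through a browser automation library), and the credentials are made up.

```python
import csv
import io

# Test data kept apart from the script; in practice a CSV file on disk.
CASES_CSV = """username,password,expected
alice,s3cret,ok
alice,wrong,denied
,s3cret,denied
"""


def attempt_login(username: str, password: str) -> str:
    """Stand-in for driving the real application under test."""
    return "ok" if (username, password) == ("alice", "s3cret") else "denied"


def run_cases(text: str) -> list:
    """Run every row and return (row, passed) pairs; no test runner involved."""
    results = []
    for row in csv.DictReader(io.StringIO(text)):
        actual = attempt_login(row["username"], row["password"])
        results.append((row, actual == row["expected"]))
    return results


results = run_cases(CASES_CSV)
passed = sum(1 for _, ok in results if ok)
print(f"{passed}/{len(results)} passed")  # 3/3 passed
for row, ok in results:
    if not ok:
        print("FAILED:", row)
```

Keeping data loading, execution, and reporting in separate functions from the start makes it easier to lift them into a framework later if the suite grows.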

Interview Emphasis

- Adaptability: I’d showcase my ability to work both with or without a framework, choosing the best approach based on the project’s context.

- Growth Mindset: I’d express that even if starting without a framework, I’d look for patterns and opportunities to build reusable components that form the foundation of a future framework if the project demands it.

#48 Which tools are you well-acquainted with?

List the tools you have used. Whatever else is on your list, make sure you have hands-on experience with Selenium.

Here are some interview questions based on the Selenium automation tool.

#49 Can we automate CAPTCHA or reCAPTCHA?

The Short Answer:

Fully automating CAPTCHA/reCAPTCHA is inherently difficult, often undesirable, and goes against their purpose of preventing bots.

However, there are a few approaches with limitations:

Possible, but not Ideal Methods:

- Image Recognition: Some advanced OCR techniques attempt to decode CAPTCHA images, but their success rate is unreliable due to deliberate distortions.

- External Services: Paid services claim to solve CAPTCHAs, but they’re costly, ethically questionable, and often become ineffective as CAPTCHA providers evolve.

- Test Mode Bypass: During development, consider if your testing tools can disable CAPTCHA or leverage test keys provided by reCAPTCHA.

Better Strategies:

- API Testing: If possible, focus your automation on directly testing the underlying backend APIs protected by the CAPTCHA.

- Manual Intervention: For scenarios where the CAPTCHA must be part of the test flow, design tests to pause for manual CAPTCHA solving.

Interview Note: You need to emphasize that attempting to circumvent the core function of CAPTCHA/reCAPTCHA should be carefully considered in context with the specific application and its security needs.

#50 When do you go for manual rather than automated testing?

Exploratory, usability, and ad-hoc testing rely on a tester’s judgment, intuition, and domain knowledge rather than on scripted, repeatable steps, so these types of testing are best done manually rather than through automation.

#51 Can you discuss the integration of automation testing with defect management systems? How do you track and manage bugs detected during automated testing?

Absolutely! My approach would be:

1. Choosing the Right Tool

- Dedicated Defect Management Systems: Tools like Jira or Bugzilla provide comprehensive issue tracking and workflow customization, and test management tools like TestRail can link test results to the issues logged in them.

- Project Management Integrations: If your team extensively uses tools like Trello or Asana, explore their bug tracking capabilities or potential add-ons.

2. Seamless Integration