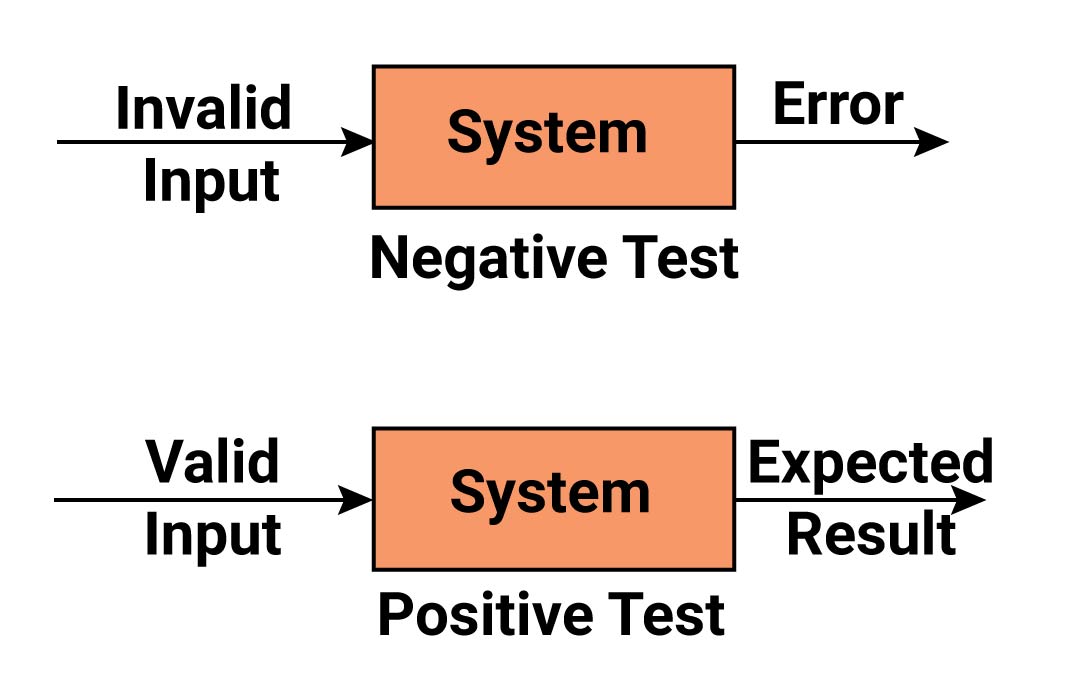

Effective software testing goes beyond confirming that an application functions as expected with valid inputs; it includes both positive and negative testing.

While positive testing ensures the system works correctly with valid inputs, negative testing explores how well the application handles invalid inputs and unexpected scenarios.

Remarkably, by one estimate, roughly 85% of test cases typically map to just 70% of the overall requirements, which shows how much weight positive scenarios carry. However, the often overlooked remaining 30%, which covers how the application handles invalid or negative values, is equally crucial: it ensures that the application behaves robustly under unfavorable conditions and unexpected inputs.

This comprehensive approach, covering both positive and negative scenarios, contributes significantly to delivering a dependable and high-quality software product.

What Is Positive Testing? (With an Example)

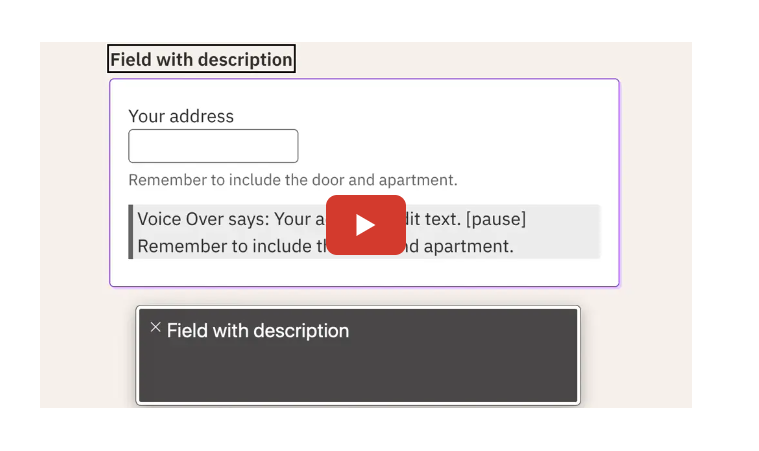

Positive testing validates an application’s functionality with valid inputs to ensure that it performs as expected. Testers write test cases with predetermined expected outputs to confirm that the system accepts the inputs a typical user would provide.

This type of testing is crucial for confirming that core features work as intended before the system is exercised with invalid or unexpected data.

For instance, consider a login functionality where a user is required to enter a username and password. In this scenario, positive testing would involve verifying that the system allows access with the correct combination of a valid username and password.
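To make the login example concrete, here is a minimal Java sketch. The `Authenticator` class and its in-memory credential map are hypothetical stand-ins for a real user store (which would compare password hashes, not plain text); the positive test only asserts that a valid username and password pair is accepted.

```java
import java.util.Map;

// Hypothetical authenticator backed by an in-memory map (stand-in for a real user store).
// Real systems store and compare password hashes, never plain-text passwords.
class Authenticator {
    private final Map<String, String> users;

    Authenticator(Map<String, String> users) {
        this.users = users;
    }

    // Accepts the login only if the username exists and the password matches exactly
    boolean login(String username, String password) {
        String stored = users.get(username);
        return stored != null && stored.equals(password);
    }

    public static void main(String[] args) {
        Authenticator auth = new Authenticator(Map.of("alice", "s3cretPass"));
        // Positive test: the correct username and password combination is accepted
        System.out.println(auth.login("alice", "s3cretPass")); // true
    }
}
```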

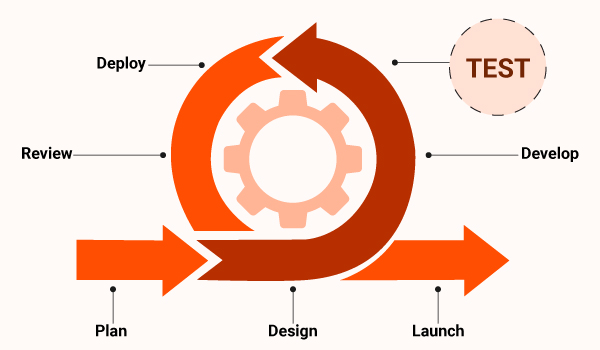

Positive testing not only ensures the system’s expected behavior but also aids in knowledge sharing regarding the system architecture throughout the Software Development Life Cycle (SDLC).

Example of Positive Testing

The method is the same as for negative testing, except that valid data is entered instead of invalid data, and the expected result is that the system accepts the input without any problem.

Examples of Positive Test Scenarios

- The password box should accept a password of at least 6 characters

- The password box should accept a password of up to 20 characters

- The password box should accept alphanumeric passwords 6–20 characters in length
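These scenarios can be expressed as a small Java sketch. It assumes a hypothetical rule of 6–20 characters made up of letters and digits, and a hypothetical `PasswordValidator.isValid` method; the positive tests feed in passwords that should pass, including both length boundaries.

```java
// Assumed rule for illustration: 6-20 characters, ASCII letters and digits only
class PasswordValidator {
    static final int MIN_LENGTH = 6;
    static final int MAX_LENGTH = 20;

    static boolean isValid(String password) {
        if (password == null) {
            return false;
        }
        int length = password.length();
        // The regex [A-Za-z0-9]+ allows only ASCII letters and digits
        return length >= MIN_LENGTH && length <= MAX_LENGTH
                && password.matches("[A-Za-z0-9]+");
    }

    public static void main(String[] args) {
        // Positive tests: each of these valid passwords should be accepted
        String[] validPasswords = {
            "abc123",               // exactly the minimum length (6)
            "abcdefghij0123456789", // exactly the maximum length (20)
            "Passw0rd42"            // mixed-case alphanumeric, mid-range length
        };
        for (String p : validPasswords) {
            System.out.println(p + " -> " + isValid(p));
        }
    }
}
```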

Importance of Positive Testing

- Functionality Verification: At its core, positive testing is about making sure the software does what it’s supposed to do. It confirms that the basic features and user flows work as designed.

- Building Confidence: Successful positive tests give developers, stakeholders, and end-users confidence that the fundamental system works. This is crucial before moving on to more complex testing.

- Catching Early Errors: While focused on success, positive testing can still uncover major bugs or inconsistencies. Fixing these early is more efficient and cost-effective.

- Baseline for Further Testing: Positive tests establish a working baseline. If issues arise in later negative tests or other test types, you can refer back to see if core functionality has been affected.

- User Experience Focus: Positive testing aligns with how real users would interact with the software, ensuring the intended experience is smooth and functional.

Specific Benefits

- Improved Software Quality: Regular positive testing helps maintain quality standards across development cycles.

- Reduced Risk of Failure: By catching core functional issues early, you decrease the chance of major problems after release.

- Time Efficiency: Positive tests are often straightforward to design, making them a time-efficient way to verify essential system components.

- Positive User Perception: A product that works well thanks to thorough positive testing leads to satisfied users and a positive brand reputation.

What Is Negative Testing?

Negative testing explores the system’s behavior when it is subjected to invalid inputs or unexpected conditions.

The objective of negative testing is to ensure that the system responds appropriately by displaying errors when necessary and not exhibiting errors in situations where it should not.

Negative testing is essential for uncovering vulnerabilities and for exercising scenarios the system was not explicitly designed to handle.

For instance, consider a scenario where a user is required to enter a password. Negative testing in this context would involve entering invalid inputs, such as passwords containing disallowed special characters or passwords that exceed the allowed character limit.

The purpose is simple: to test the system’s ability to handle unexpected inputs and scenarios that may arise during real-world usage.

Examples of Negative Testing

Filling in Required Fields: Suppose a website has fields that the user must fill in. Negative testing can be done by entering invalid input, such as letters in a field that expects numbers; the webpage should then either show an error message or refuse the input.
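A minimal sketch of this check, assuming a hypothetical required field that expects a whole number (such as an age). The `RequiredNumberField.validate` method returns an error message for bad input and `null` when the input is accepted, so the negative tests can assert on the message the user would see.

```java
// Hypothetical validator for a required field that expects a whole number (for example, age)
class RequiredNumberField {
    // Returns an error message for invalid input, or null if the input is accepted
    static String validate(String input) {
        if (input == null || input.isBlank()) {
            return "This field is required";
        }
        if (!input.trim().matches("\\d+")) {
            return "Please enter digits only";
        }
        return null;
    }

    public static void main(String[] args) {
        // Negative inputs: letters and an empty value should each produce an error
        System.out.println(validate("abc")); // Please enter digits only
        System.out.println(validate(""));    // This field is required
        // Control case: a valid number is accepted
        System.out.println(validate("42"));  // null
    }
}
```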

Factors that need to be considered while performing a negative test

- Input data

- Action

- Output
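One way to keep these three factors explicit is to model each negative test case as data. In this sketch, `NegativeTestCase` and the `submitAge` feature (assumed to accept ages 0 to 120) are hypothetical; each case bundles the input data, the action applied to it, and the expected output.

```java
import java.util.List;
import java.util.function.Function;

// A negative test described by three factors: input data, action, and expected output
class NegativeTestCase {
    final String inputData;
    final Function<String, String> action;
    final String expectedOutput;

    NegativeTestCase(String inputData, Function<String, String> action, String expectedOutput) {
        this.inputData = inputData;
        this.action = action;
        this.expectedOutput = expectedOutput;
    }

    // Apply the action to the input and compare the actual output with the expected one
    boolean passes() {
        return expectedOutput.equals(action.apply(inputData));
    }

    // Hypothetical feature under test: accepts ages 0-120, otherwise returns an error
    static String submitAge(String value) {
        try {
            int age = Integer.parseInt(value.trim());
            return (age >= 0 && age <= 120) ? "OK" : "Error: age out of range";
        } catch (NumberFormatException e) {
            return "Error: not a number";
        }
    }

    public static void main(String[] args) {
        List<NegativeTestCase> cases = List.of(
            new NegativeTestCase("abc", NegativeTestCase::submitAge, "Error: not a number"),
            new NegativeTestCase("-5", NegativeTestCase::submitAge, "Error: age out of range"),
            new NegativeTestCase("", NegativeTestCase::submitAge, "Error: not a number"));
        for (NegativeTestCase c : cases) {
            System.out.println("'" + c.inputData + "' -> " + (c.passes() ? "PASS" : "FAIL"));
        }
    }
}
```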

Examples of Negative Test Scenarios

- The password box should not accept fewer than 6 characters

- The password box should not accept more than 20 characters

- The password box should not accept special characters
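The scenarios above can be sketched as negative tests that check not only that bad input is rejected but also which error message is shown. The 6–20 character, letters-and-digits rule and the `PasswordErrors.validate` method are assumptions for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Assumed rule for illustration: 6-20 characters, ASCII letters and digits only.
// validate() returns the error message the user should see, or null if the password is accepted.
class PasswordErrors {
    static String validate(String password) {
        if (password == null || password.isEmpty()) {
            return "Password is required";
        }
        if (password.length() < 6) {
            return "Password must be at least 6 characters";
        }
        if (password.length() > 20) {
            return "Password must not exceed 20 characters";
        }
        if (!password.matches("[A-Za-z0-9]+")) {
            return "Password must contain only letters and digits";
        }
        return null;
    }

    public static void main(String[] args) {
        // Negative tests: each invalid input is paired with the error it should trigger
        Map<String, String> cases = new LinkedHashMap<>();
        cases.put("abc12", "Password must be at least 6 characters");
        cases.put("a".repeat(21), "Password must not exceed 20 characters");
        cases.put("pass@word1", "Password must contain only letters and digits");

        for (Map.Entry<String, String> c : cases.entrySet()) {
            boolean passed = c.getValue().equals(validate(c.getKey()));
            System.out.println((passed ? "PASS: " : "FAIL: ") + c.getKey());
        }
    }
}
```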

Importance of Negative Testing

Forget about simply aiming to crash your application. True negative testing is about resilience and smart defense:

- Exposing Hidden Flaws: Many bugs lurk specifically in how the software reacts to the unexpected. Negative testing drags those out into the light where they can be fixed proactively.

- Bulletproofing Error Handling: A well-made app doesn’t just fall over when it gets strange input. Negative testing ensures it has clear error messages, ways to recover, and doesn’t leave users frustrated.

- Strengthening Security: Malicious users love to poke at edges and find gaps. Negative tests simulate some of those attacks, helping you close security holes before they can be exploited.

The Real-World Impact

Think of users out there – they won’t always be perfect. Negative testing makes sure your software is ready for:

- Accidental Mistakes: Typos, missed fields, fat-fingered touches… negative testing ensures the app gracefully guides the user to correct these.

- Unconventional Thinking: Some people try things “outside the box.” Negative tests make sure the app doesn’t punish them and helps them get back on track.

- Unexpected Conditions: Internet flakiness, weird device settings – negative testing reveals if your app adapts instead of simply failing.

The Bottom Line for Testers

Skipping negative testing is like training a boxer who never spars: they know the moves, but a real fight is messy. Negative tests prepare the software for the real-world chaos users inevitably create, ensuring a robust, user-friendly experience.

Difference Between Positive and Negative Testing


Each type of testing has its own characteristics. The key differences between positive and negative testing are:

| Feature | Positive Testing | Negative Testing |
|---|---|---|
| Scope of Inputs | Tests a specific set of user inputs with valid values. | Tests with excessive (load) inputs and invalid values. |
| Perspective | Approached from a positive point of view, confirming that the system accepts valid inputs. | Approached from a negative point of view, testing scenarios and inputs the system was not designed for. |
| Test Conditions | Uses a known set of test conditions derived from client requirements. | Uses an open-ended set of test conditions, covering anything not mentioned in client requirements. |
| Password Test Example | Validates that the password field accepts 6–20 characters, including alphanumeric values. | Ensures the password field rejects input longer than 20 characters or containing special characters. |

Conclusion

Positive and negative testing are integral components of software testing; together they help deliver a reliable, high-quality application.

Positive testing ensures that the system performs as expected under normal circumstances, while negative testing explores how the system behaves when subjected to invalid inputs and unanticipated scenarios.

Therefore, organizations and testers should recognize the significance of both methodologies and incorporate them into their testing strategies to reduce defects and improve overall software quality.

By understanding and implementing positive and negative testing effectively, testers can contribute significantly to the development of robust and resilient software applications.

FAQs

How do you write positive and negative test cases in Selenium?

Writing positive and negative test cases in Selenium involves crafting scenarios that cover expected behaviors (positive) and potential failure scenarios (negative). Here are examples for both:

Positive Test Case:

Scenario: User Login with Valid Credentials

Test Steps:

- Open the application login page.

- Enter valid username.

- Enter valid password.

- Click on the “Login” button.

Expected Result:

- User should be successfully logged in.

- Verify that the user is redirected to the dashboard.

Selenium Code (Java):

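```java
// Sketch assuming TestNG, a `driver` field set up in @BeforeMethod, and placeholder URL/element IDs
@Test
public void testValidLogin() {
    driver.get(LOGIN_URL);
    driver.findElement(By.id("username")).sendKeys("validUser");
    driver.findElement(By.id("password")).sendKeys("validPassword");
    driver.findElement(By.id("login")).click();
    // Wait for the redirect to the dashboard before asserting
    new WebDriverWait(driver, Duration.ofSeconds(10))
            .until(ExpectedConditions.urlContains("/dashboard"));
    Assert.assertTrue(driver.getCurrentUrl().contains("/dashboard"));
}
```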

Negative Test Case:

Scenario: User Login with Invalid Credentials

Test Steps:

- Open the application login page.

- Enter invalid username.

- Enter invalid password.

- Click on the “Login” button.

Expected Result:

- User should not be logged in.

- An error message should be displayed.

Selenium Code (Java):

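```java
// Sketch assuming TestNG, a `driver` field set up in @BeforeMethod, and placeholder URL/element IDs
@Test
public void testInvalidLogin() {
    driver.get(LOGIN_URL);
    driver.findElement(By.id("username")).sendKeys("unknownUser");
    driver.findElement(By.id("password")).sendKeys("wrongPassword");
    driver.findElement(By.id("login")).click();
    // Wait for the error message to appear, then check the user was not redirected
    WebElement error = new WebDriverWait(driver, Duration.ofSeconds(10))
            .until(ExpectedConditions.visibilityOfElementLocated(By.id("error")));
    Assert.assertFalse(error.getText().isEmpty());
    Assert.assertFalse(driver.getCurrentUrl().contains("/dashboard"));
}
```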

In both cases, use assertions (e.g., Assert.assertEquals(), Assert.assertTrue()) to validate the expected outcomes. Make sure to handle synchronization issues using appropriate waits to ensure the elements are present or visible before interacting with them.

Remember, negative testing should cover various failure scenarios such as incorrect inputs, missing data, or unexpected behaviors.


#1) What is the difference between positive testing and happy path testing?

Positive Testing

- Purpose: Verifies that the software behaves as expected when given valid inputs and conditions.

- Focus: Confirms that the core functionality of the system works under normal circumstances.

- Scope: Encompasses a wider range of test cases that involve correct inputs and anticipated user actions.

Happy Path Testing

- Purpose: Validates the most typical, successful flow of events through a system.

- Focus: Ensures the basic user journey functions without issues. Streamlines testing for the most common use case.

- Scope: A narrower subset of positive testing, focused on the primary “happy path” a user would take.

Key Differences

- Breadth: Positive testing casts a wider net, including variations in valid input and expected results. Happy path testing maintains a tight focus on the core, ideal user experience.

- Complexity: Happy path tests usually cover simpler scenarios, while positive testing can explore more intricate edge cases and alternative paths.

Example

Consider testing a login form:

- Positive Testing:

  - Successful login with correct username and password.

  - Successful login with a case-insensitive username.

  - Successful login after using the “Forgot Password” functionality.

- Happy Path Testing:

  - User enters correct username and password, clicks “Login,” and is successfully taken to their dashboard.

#2) Top 10 negative test cases

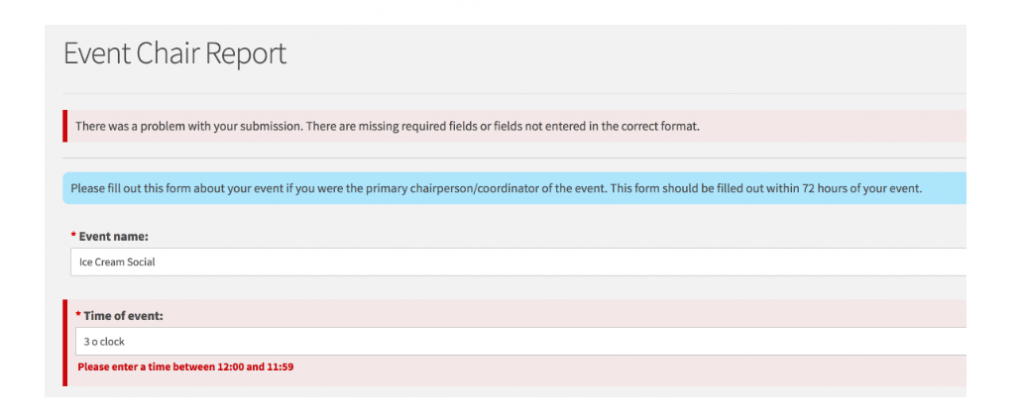

1. Invalid Data Format

- Test: Attempt to enter data in a format the field doesn’t accept.

- Example: Entering letters into a phone number field, or an invalid email address.
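A sketch of format checks for the two examples. The phone pattern (optional `+` then 7–15 digits) and the deliberately loose email pattern are assumptions; real applications define their own rules, and full email validation is considerably more involved.

```java
import java.util.regex.Pattern;

// Simplified format checks assumed for illustration; real rules vary by application
class FormatChecks {
    // Assumed phone format: optional leading '+', then 7-15 digits
    private static final Pattern PHONE = Pattern.compile("\\+?\\d{7,15}");
    // Deliberately loose email check: text@text.text with no spaces or extra '@'
    private static final Pattern EMAIL = Pattern.compile("[^@\\s]+@[^@\\s]+\\.[^@\\s]+");

    static boolean isValidPhone(String s) {
        return PHONE.matcher(s).matches();
    }

    static boolean isValidEmail(String s) {
        return EMAIL.matcher(s).matches();
    }

    public static void main(String[] args) {
        // Negative tests: letters in a phone number and malformed emails must be rejected
        System.out.println(isValidPhone("555-CALL-NOW"));     // false
        System.out.println(isValidEmail("user.example.com")); // false (no @)
        System.out.println(isValidEmail("user@example"));     // false (no domain suffix)
        // Control cases
        System.out.println(isValidPhone("+15551234567"));     // true
        System.out.println(isValidEmail("user@example.com")); // true
    }
}
```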


2. Boundary Value Testing

- Test: Input values at the extremes of valid ranges.

- Example: If a field accepts numbers between 1 and 100, test with 0, 1, 100, and 101.
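The four boundary values from the example can be checked with a short Java sketch; `isAccepted` is a hypothetical validator for the 1–100 range.

```java
// Boundary value sketch for a field that accepts whole numbers from 1 to 100.
// isAccepted() is a hypothetical validator standing in for the real field.
class BoundaryValues {
    static final int MIN = 1;
    static final int MAX = 100;

    static boolean isAccepted(int value) {
        return value >= MIN && value <= MAX;
    }

    public static void main(String[] args) {
        // Values just outside the range must be rejected; the edges themselves accepted
        int[] values = {MIN - 1, MIN, MAX, MAX + 1};
        boolean[] expected = {false, true, true, false};
        for (int i = 0; i < values.length; i++) {
            boolean ok = isAccepted(values[i]) == expected[i];
            System.out.println(values[i] + " -> " + (ok ? "PASS" : "FAIL"));
        }
    }
}
```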

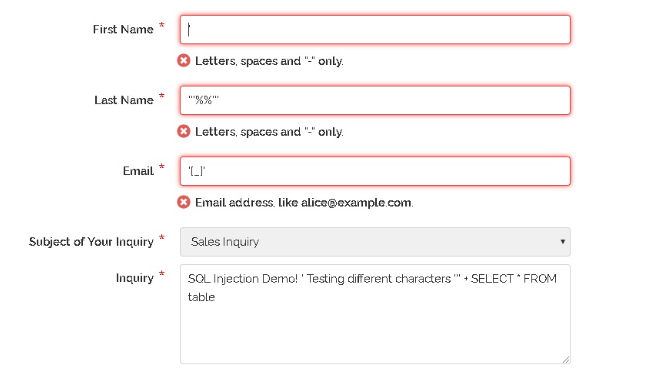

3. Entering Invalid Characters

- Test: Use special characters, SQL commands, or scripting tags in input fields.

- Example: Entering `<script>alert('XSS')</script>` to test for cross-site scripting (XSS) vulnerabilities.
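Entering the payload is only half the test; the other half is checking that the application renders it as text rather than executing it. This sketch assumes the application HTML-escapes user input before display and uses a small hand-written `escapeHtml` helper for illustration; in production, prefer a vetted encoding library such as the OWASP Java Encoder.

```java
// Minimal HTML-escaping sketch: checks that user input is rendered as text, not markup.
// Hand-written for illustration only; production code should use a vetted encoder library.
class XssCheck {
    static String escapeHtml(String input) {
        StringBuilder out = new StringBuilder(input.length());
        for (char c : input.toCharArray()) {
            switch (c) {
                case '<': out.append("&lt;"); break;
                case '>': out.append("&gt;"); break;
                case '&': out.append("&amp;"); break;
                case '"': out.append("&quot;"); break;
                case '\'': out.append("&#x27;"); break;
                default: out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String payload = "<script>alert('XSS')</script>";
        String rendered = escapeHtml(payload);
        // Negative test: the rendered output must not contain an executable <script> tag
        System.out.println(rendered);
        System.out.println(rendered.contains("<script>") ? "FAIL" : "PASS");
    }
}
```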


4. Mandatory Field Omission

- Test: Leave required fields blank and try to proceed.

- Example: Submitting a signup form without filling in the username or password fields.
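A sketch of the signup example, assuming a hypothetical form represented as a map of field names to values, with `username` and `password` as the required fields. The check collects every missing field so the error message can name all of them at once.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical signup-form check: lists every required field that was left blank or omitted
class SignupForm {
    static final List<String> REQUIRED = List.of("username", "password");

    static List<String> missingFields(Map<String, String> form) {
        List<String> missing = new ArrayList<>();
        for (String field : REQUIRED) {
            String value = form.get(field);
            if (value == null || value.isBlank()) {
                missing.add(field);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Negative test: username is blank and password is omitted entirely
        List<String> missing = missingFields(Map.of("username", " "));
        System.out.println("Missing: " + missing); // Missing: [username, password]
        // The form should be blocked whenever a required field is missing
        System.out.println(missing.isEmpty() ? "FAIL: form accepted" : "PASS: form blocked");
    }
}
```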


5. Incorrect Data Combinations

- Test: Submit data where individual fields might be valid, but their combination isn’t.

- Example: Selecting a birth year in the future, or a shipping address in a different country than the selected billing country.
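Cross-field rules need tests that combine values. In this sketch the birth-year check takes the current year as a parameter so tests are repeatable, and the requirement that shipping and billing countries match is a hypothetical business rule that not every application enforces.

```java
import java.time.Year;

// Cross-field check sketch: each field may be valid alone, but the combination is not.
// The "countries must match" rule is a hypothetical business rule for illustration.
class CombinationChecks {
    // A birth year is only plausible if it is not later than the current year
    static boolean isPlausibleBirthYear(int birthYear, int currentYear) {
        return birthYear <= currentYear;
    }

    static boolean countriesMatch(String billingCountry, String shippingCountry) {
        return billingCountry.equalsIgnoreCase(shippingCountry);
    }

    public static void main(String[] args) {
        int thisYear = Year.now().getValue();
        // Negative tests: a future birth year and mismatched countries should be rejected
        System.out.println(isPlausibleBirthYear(thisYear + 1, thisYear)); // false
        System.out.println(countriesMatch("DE", "US"));                   // false
    }
}
```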

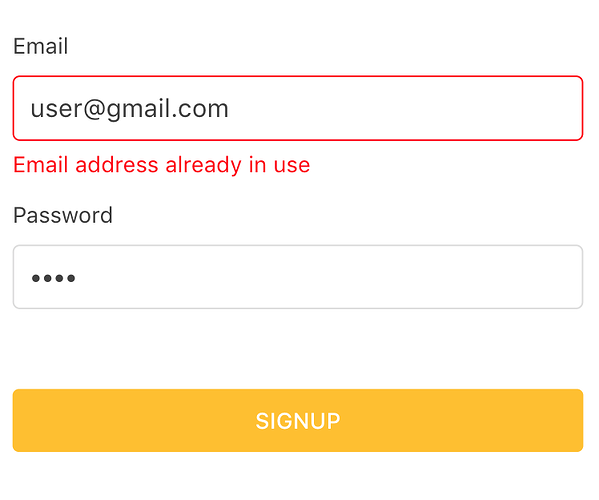

6. Duplicate Data Entry

- Test: Attempt to create records that are already present.

- Example: Registering with a username that already exists.
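A minimal sketch of the duplicate-username check, using a hypothetical in-memory `UserRegistry`. Treating usernames case-insensitively is an assumption; a real system would also enforce uniqueness in the database, since two simultaneous requests could otherwise both pass an application-level check.

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Hypothetical in-memory registry used to illustrate duplicate-entry tests
class UserRegistry {
    private final Set<String> usernames = new HashSet<>();

    // Returns false when the username is already taken (compared case-insensitively here)
    boolean register(String username) {
        return usernames.add(username.toLowerCase(Locale.ROOT));
    }

    public static void main(String[] args) {
        UserRegistry registry = new UserRegistry();
        System.out.println(registry.register("alice")); // true: first registration
        // Negative tests: registering the same name again must be refused
        System.out.println(registry.register("alice")); // false
        System.out.println(registry.register("Alice")); // false (case-insensitive duplicate)
    }
}
```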


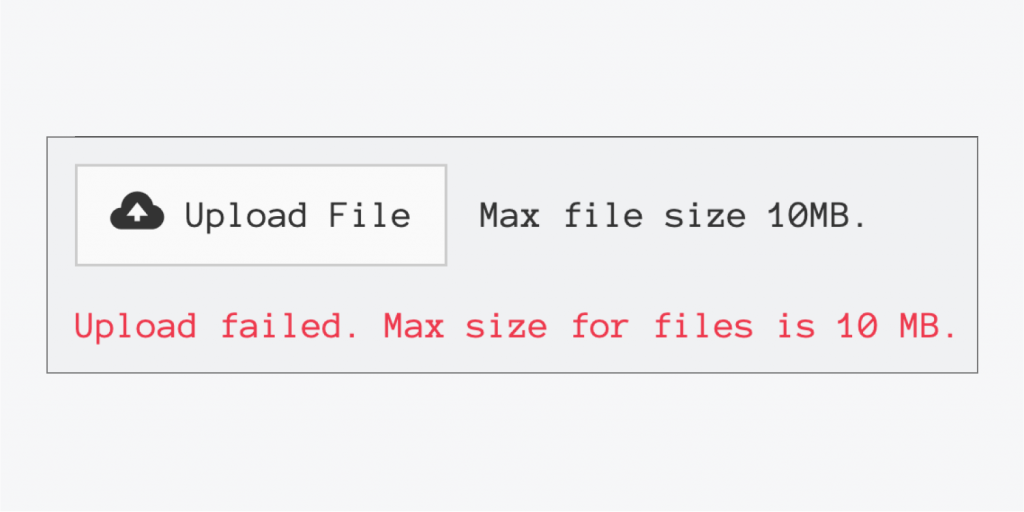

7. File Upload Errors

- Test: Try uploading files of unsupported types, incorrect sizes, or those containing malicious code.
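A sketch of the type and size checks, assuming a hypothetical policy of PDF, PNG, and JPG files up to 5 MB. Checking the extension alone is weak, since a file can be renamed; production code would also inspect the content and scan for malware, which is not shown here.

```java
import java.util.Locale;
import java.util.Set;

// Hypothetical upload policy: only PDF/PNG/JPG files up to 5 MB are allowed.
// Content inspection and malware scanning are out of scope for this sketch.
class UploadValidator {
    static final Set<String> ALLOWED_EXTENSIONS = Set.of("pdf", "png", "jpg");
    static final long MAX_BYTES = 5L * 1024 * 1024;

    // Returns an error message, or null if the file passes both checks
    static String validate(String fileName, long sizeBytes) {
        int dot = fileName.lastIndexOf('.');
        String ext = dot < 0 ? "" : fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
        if (!ALLOWED_EXTENSIONS.contains(ext)) {
            return "Unsupported file type";
        }
        if (sizeBytes > MAX_BYTES) {
            return "File too large";
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(validate("setup.exe", 1_000));           // Unsupported file type
        System.out.println(validate("scan.pdf", 6L * 1024 * 1024)); // File too large
        System.out.println(validate("photo.PNG", 200_000));         // null (accepted)
    }
}
```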


8. Interrupted Operations

- Test: Simulate actions like closing the browser, losing internet connection, or device power failures during a process.

- Example: Interrupting a large file download to see if it can resume correctly.

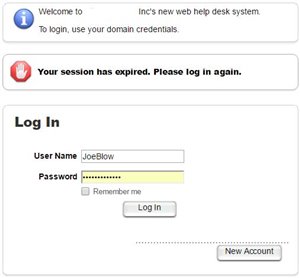

9. Session Expiration

- Test: Check if the application handles session timeouts gracefully, prompting users to re-authenticate or save their work.
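A sketch of an idle-timeout check, assuming a hypothetical 30-minute limit. Passing the current time in as a parameter (rather than reading the clock inside the method) lets a negative test simulate an expired session without actually waiting.

```java
import java.time.Duration;
import java.time.Instant;

// Sketch of an idle-timeout session; the 30-minute limit is an assumed value
class UserSession {
    static final Duration IDLE_TIMEOUT = Duration.ofMinutes(30);
    private Instant lastActivity;

    UserSession(Instant start) {
        this.lastActivity = start;
    }

    // A session is expired once the idle time reaches the timeout
    boolean isExpired(Instant now) {
        return Duration.between(lastActivity, now).compareTo(IDLE_TIMEOUT) >= 0;
    }

    // Only live sessions may be refreshed; expired ones must re-authenticate
    boolean touch(Instant now) {
        if (isExpired(now)) {
            return false;
        }
        lastActivity = now;
        return true;
    }

    public static void main(String[] args) {
        Instant start = Instant.parse("2024-01-01T10:00:00Z");
        UserSession session = new UserSession(start);
        // Negative test: a request 31 minutes later should find the session expired
        Instant later = start.plus(Duration.ofMinutes(31));
        System.out.println(session.isExpired(later) ? "Redirect to login" : "Session still active");
    }
}
```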


10. Excessive Data Input

- Test: Enter more data than the field can accommodate.

- Example: Pasting a huge block of text into a field with a character limit.
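A sketch of the length check, assuming a hypothetical comment field limited to 500 characters that rejects over-long input rather than silently truncating it. Counting code points instead of using `String.length()` keeps characters such as emoji from counting twice.

```java
// Hypothetical comment field limited to 500 characters; over-long input is rejected, not truncated
class LengthLimit {
    static final int MAX_CHARS = 500;

    static boolean isWithinLimit(String text) {
        // codePointCount counts a character such as an emoji once, unlike length()
        return text.codePointCount(0, text.length()) <= MAX_CHARS;
    }

    public static void main(String[] args) {
        String huge = "x".repeat(10_000); // simulates pasting a huge block of text
        System.out.println(isWithinLimit(huge));            // false
        System.out.println(isWithinLimit("x".repeat(500))); // true (exactly at the limit)
    }
}
```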