Let’s take a close look at code analysis through statement coverage testing: why it matters, how it is applied in practice, and its advantages and disadvantages, with relevant examples along the way.

We’ll unravel how this technique helps ensure every line of code is scrutinized and put to the test. Whether you’re a seasoned developer or a curious tech enthusiast, this blog promises valuable insights into enhancing code quality and reliability.

Get ready to sharpen your testing arsenal and elevate your software craftsmanship!

What is Statement Coverage Testing?

Statement coverage testing is a fundamental white-box testing technique that checks whether every statement in a piece of code is executed at least once, as a gauge of how thorough the testing was.

This method offers useful insights into how thoroughly a program’s source code has been checked by monitoring the execution of each line of code.

How to Measure Statement Coverage?

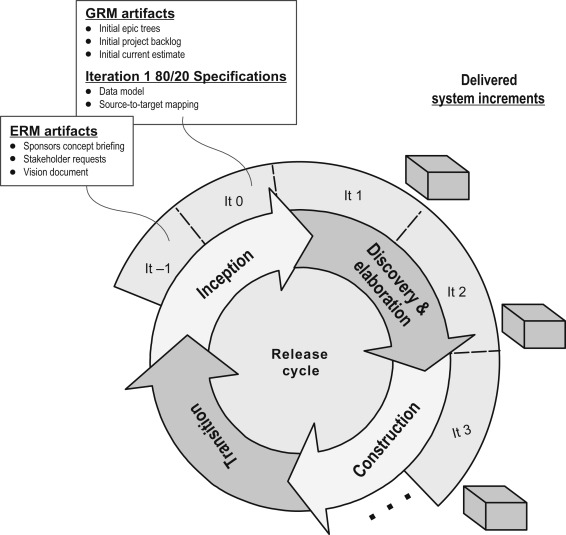

Statement coverage is calculated by comparing the number of executed statements to the total number of statements in the code:

Statement Coverage = (Number of Executed Statements / Total Number of Statements) * 100%

Since this evaluation is given as a percentage, testers can determine what fraction of the code has really been used during testing.

Suppose we have a code snippet with 10 statements, and during testing, 7 of these statements are executed.

    def calculate_average(numbers):
        total = 0
        count = 0
        for num in numbers:
            total += num
            count += 1
        if count > 0:
            average = total / count
        else:
            average = 0
        return average

In this case:

Number of Executed Statements: 7

Total Number of Statements: 10

Using the formula for statement coverage:

Statement Coverage = (Number of Executed Statements / Total Number of Statements) * 100%

Statement Coverage = (7 / 10) * 100% = 70%

Therefore, this code snippet’s statement coverage is 70%: during testing, 70% of the code’s statements were executed.
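The 70% figure can be reproduced with a small tracer. The sketch below is an illustration only, not how production coverage tools are implemented. It assumes the single test passes an empty list (the text above does not say which input was used; an empty list is one input that executes 7 of the 10 statements). The helper names `statement_lines` and `measure` and the `<snippet>` label are hypothetical. It counts statements with the standard `ast` module and records executed lines with `sys.settrace`.

```python
import ast
import sys

# The snippet under test, kept as a string so its line numbers are known.
SOURCE = """\
def calculate_average(numbers):
    total = 0
    count = 0
    for num in numbers:
        total += num
        count += 1
    if count > 0:
        average = total / count
    else:
        average = 0
    return average
"""

FILENAME = "<snippet>"  # hypothetical label used to recognise the snippet's frames


def statement_lines(source):
    """Line numbers on which a statement starts (`else:` is not a statement)."""
    tree = ast.parse(source)
    return {node.lineno for node in ast.walk(tree) if isinstance(node, ast.stmt)}


def measure(source, test):
    """Run test(namespace); return (executed statement lines, all statement lines)."""
    statements = statement_lines(source)
    executed = set()

    def tracer(frame, event, arg):
        # Record only 'line' events that come from the snippet itself.
        if event == "line" and frame.f_code.co_filename == FILENAME:
            executed.add(frame.f_lineno)
        return tracer

    namespace = {}
    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        exec(compile(source, FILENAME, "exec"), namespace)  # runs the `def` statement
        test(namespace)
    finally:
        sys.settrace(previous)  # restore whatever tracer was active before
    return executed & statements, statements


# Assumed test suite: a single call with an empty list.
hit, statements = measure(SOURCE, lambda ns: ns["calculate_average"]([]))
coverage = 100 * len(hit) / len(statements)
missed = sorted(statements - hit)
print(f"{len(hit)} of {len(statements)} statements executed: {coverage:.0f}%")
print("lines not executed:", missed)
```

Under these assumptions the sketch reports 7 of 10 statements executed; the two statements in the loop body and the `average = total / count` line are never reached, which is the gap an additional test with a non-empty list would close.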

To test the software more thoroughly, it’s critical to aim for higher statement coverage. Additional coverage metrics like branch coverage and path coverage are also essential for evaluating the quality of the code.

Achieving 100% statement coverage, however, does not guarantee that all scenarios have been tested.

Example of Statement Coverage Testing:

Let’s consider a simple code snippet to illustrate statement coverage:

    def calculate_sum(a, b):
        if a > b:
            result = a + b
        else:
            result = a - b
        return result

Suppose we have a test suite with two test cases:

- calculate_sum(5, 3)

- calculate_sum(3, 5)

Both the ‘if’ and ‘else’ branches are executed when these test cases are applied to the function, covering all the code statements. The resulting 100% statement coverage shows that every statement in the code has been executed at least once.
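The two test cases can also be written as runnable checks. This is a minimal sketch: the expected values (8 and -2) are worked out from the function as written rather than given in the text, and the test function names are hypothetical.

```python
def calculate_sum(a, b):
    if a > b:
        result = a + b
    else:
        result = a - b
    return result


def test_first_argument_larger():
    # a > b, so the `if` body runs: 5 + 3
    assert calculate_sum(5, 3) == 8


def test_first_argument_not_larger():
    # a <= b, so the `else` body runs: 3 - 5
    assert calculate_sum(3, 5) == -2


if __name__ == "__main__":
    test_first_argument_larger()
    test_first_argument_not_larger()
    print("both test cases passed")
```

Dropping either test would leave one of the two assignments to `result` unexecuted, and statement coverage would fall below 100%.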

Statement coverage testing ensures that no lines of code are left untested and adds to the software’s overall stability.

It’s crucial to remember, though, that statement coverage offers only a basic level of coverage assessment: high statement coverage doesn’t imply that the code is free of errors or that the testing was rigorous.

For a more thorough evaluation of code quality, other methods, like branch coverage and path coverage, may be required.

Advantages and disadvantages of statement coverage testing

Statement Coverage Testing Benefits/Advantages

Detailed Code Inspection:

Statement Coverage Testing makes sure that each line of code is run at least once during testing.

This facilitates the discovery of any untested code segments and guarantees a more thorough evaluation of the product.

Consider a financial application where testing statement coverage reveals that a certain calculation module has not been tested, requiring further testing to cover it.

Quick Dead Code Detection:

Statements that no test ever executes are candidates for dead or unreachable code, so statement coverage helps engineers spot and cut out superfluous sections.

For instance, if a portion of code left over from an old feature is never executed during testing, statement coverage analysis flags it as possibly redundant.

Basic quality indicator:

As a basic quality indicator, high statement coverage shows that a significant percentage of the code has been executed during testing.

It does demonstrate a level of testing rigor, but it does not ensure software that is bug-free. Achieving 90% statement coverage, for instance, demonstrates a strong testing effort within the software.

Statement Coverage Testing Disadvantages

Concentrate on Quantity Rather than Quality:

Statement coverage assesses code execution but not quality. With superficial tests that don’t account for many circumstances, a high coverage percentage may be achieved.

For instance, testing a login system can cover all of the code lines but exclude important checks for invalid passwords.

Ignores Branches and Logic:

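Statement coverage records which statements ran, not which outcome each decision took, so conditional structures like if statements can be only partly exercised.

This could result in inadequate testing of logical assumptions. A test suite might, for instance, only ever take the true outcome of an if statement that has no else block: every statement is executed, yet the case where the condition is false is never tested.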
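One way to see this gap is a sketch in which a single test executes every statement yet never exercises the false outcome of the condition. The function `shipping_fee` and its bug are hypothetical, made up for this illustration.

```python
def shipping_fee(order_total, is_member):
    """Hypothetical example: members are meant to get free shipping."""
    fee = None
    if is_member:
        fee = 0.0
    # Bug: when is_member is False, fee is still None at this point.
    return order_total + fee


# This one test executes every statement in the function.
assert shipping_fee(50.0, True) == 50.0

# The false outcome of `if is_member` was never exercised, and it fails.
try:
    shipping_fee(50.0, False)
except TypeError as exc:
    print("untested decision outcome fails:", exc)
```

A branch coverage report would show the false outcome of `if is_member` as untaken, which is the hint that a second test is needed.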

False High Coverage:

Achieving high statement coverage does not imply that the application will be bug-free.

Despite extensive testing, some edge situations or uncommon events might still not be tested.

For instance, a scheduling tool may have excellent statement coverage but neglect to take into account changes in daylight saving time.

Inability to Capture Input Context:

Statement coverage is unable to capture the context of the input values utilized during testing.

This implies that it might ignore particular inputs that result in particular behaviors.

For example, testing of a shopping cart system might appear successful even though edge cases like negative amounts or large discounts were never taken into account.
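A small sketch of the shopping cart case, assuming a hypothetical `cart_total` function with no input validation: one ordinary test executes every statement, and the problematic inputs run exactly the same statements, so the coverage number cannot tell them apart.

```python
def cart_total(unit_price, quantity, discount_rate):
    """Hypothetical cart calculation with no input validation."""
    subtotal = unit_price * quantity
    discount = subtotal * discount_rate
    return subtotal - discount


# This single test executes every statement in the function.
assert cart_total(10.0, 2, 0.5) == 10.0

# Same statements, different inputs: coverage does not change,
# but both results are negative totals.
negative_quantity = cart_total(10.0, -3, 0.0)
oversized_discount = cart_total(10.0, 1, 1.5)
print(negative_quantity, oversized_discount)
```

Catching these cases takes tests chosen for their input values (boundaries, invalid data), not tests chosen to raise the coverage percentage.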

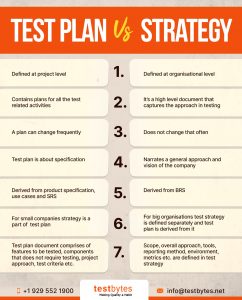

Difference Between Statement Coverage And Branch Coverage Testing

| Feature | Statement Coverage | Branch Coverage |
|---|---|---|
| Definition | Ensures every executable statement in the code is run at least once. | Ensures that every possible decision outcome (true/false) of each branch in the code is executed at least once. |
| Focus | Execution of code lines | Execution of decision paths |
| Example | if x > 10: <br> print("x is greater") | if x > 10: <br> print("x is greater") <br> else: <br> print("x is not greater") |
| Measures | Percentage of statements executed | Percentage of branches executed |
| Thoroughness | Less thorough | More thorough |
| When to Use | Early testing phases, as a baseline metric | Later testing phases for more comprehensive coverage |

FAQs

#1) How do you get 100% statement coverage?

Here’s how you achieve 100% statement coverage, explained in a clear and practical way:

Understanding Statement Coverage:

- The Goal: It means you’ve designed test cases that execute every single executable line of code in your project at least once. This doesn’t guarantee your code is bug-free, but it’s a foundational testing step.

Steps to Achieve 100% Statement Coverage:

- Analyze the Code:

  - Examine your code thoroughly to identify every executable statement. Pay close attention to conditional blocks (if, else, switch), loops, and function calls.

- Design Targeted Test Cases:

  - Create test cases that together execute every statement in your code. Think about the different input values and scenarios that will trigger every line.

  - Example: If you have a simple if x > 10 condition, you need a test case where x is greater than 10 to execute its body. A second case where x is less than or equal to 10 is needed only when there is an else block to execute (or when you are also aiming for branch coverage).

- Use a Coverage Tool:

  - Coverage tools automate this process! They instrument your code and report which lines are executed during testing. This helps pinpoint areas missing coverage.

- Iterate and Improve:

  - With your tool’s help, identify lines not yet covered. Design new test cases to address these gaps. Continue this process until you hit 100%.

Important Considerations:

- Untestable Code: Sometimes, due to dependencies or complex interactions, certain statements may be impossible to hit in testing. Document these and explain the limitations.

- Beyond 100%: 100% statement coverage doesn’t mean perfect code. You’ll need more rigorous techniques like branch coverage and mutation testing for further confidence.

- Tool Choice: Research and select a coverage tool appropriate for your programming language and testing environment.

#2) Does 100% statement coverage mean 100% branch coverage?

Nope, 100% statement coverage doesn’t automatically mean 100% branch coverage. Think of it like this:

- Statement Coverage: You’ve walked down every street in a neighborhood.

- Branch Coverage: You’ve walked down every street AND made every possible turn (both left and right at intersections).

You could cover all the streets without taking every turn, missing some pathways!

#3) What is 100% coverage in software testing?

100% coverage in software testing is a bit of a misleading term. Here’s why:

It’s About Different Metrics:

- Statement Coverage: 100% means every executable line of code has been run at least once during testing.

- Branch Coverage: 100% means every possible outcome of each decision point (e.g., if/else branches) has been executed.

- Path Coverage: 100% would be an incredibly difficult goal, as it means testing every possible combination of branches and paths through your code.
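To make the difference between the three metrics concrete, here is a minimal sketch using a hypothetical function `label` with three independent decisions and no else blocks. The test lists are one possible choice, not the only one.

```python
from itertools import product


def label(a, b, c):
    """Hypothetical function with three independent decisions."""
    parts = []
    if a:
        parts.append("A")
    if b:
        parts.append("B")
    if c:
        parts.append("C")
    return "".join(parts)


# One test runs every statement.
statement_tests = [(True, True, True)]

# Two tests make each decision evaluate both true and false.
branch_tests = [(True, True, True), (False, False, False)]

# Path coverage needs every combination of outcomes: 2**3 of them.
path_tests = list(product([True, False], repeat=3))

print(len(statement_tests), len(branch_tests), len(path_tests))
```

Each extra independent decision doubles the number of paths, which is why 100% path coverage quickly becomes impractical while statement and branch coverage stay cheap.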

Why is the Term Used Loosely?

- Common Goal: People often desire high levels of coverage with their tests. The term “100% coverage” gets used as a shorthand for aiming for a very thorough testing process.

- Realistic Targets: In practice, most teams strike a balance between coverage and the time/effort required for different types of testing.

What Should You Focus On?

- Start with Statements: Statement coverage is a good baseline.

- Prioritize Branches: Branch coverage provides more confidence in your code’s logic.

- Context Matters: The right mix of coverage techniques depends on the criticality of your software and the types of risks you want to mitigate.

#4) What is 100% multiple condition coverage?

Here’s a breakdown of 100% multiple condition coverage (MCC):

What it is:

- A Rigorous Testing Standard: MCC is a type of coverage that focuses on thoroughly testing all possible combinations of outcomes within a decision that has multiple conditions.

- Example: Let’s say you have a decision based on whether conditionA is true AND conditionB is true. MCC requires test cases covering these scenarios:

  - conditionA: True, conditionB: True

  - conditionA: True, conditionB: False

  - conditionA: False, conditionB: True

  - conditionA: False, conditionB: False

Why it matters:

- Uncovers Subtle Bugs: Simple branch coverage might miss errors that only occur when specific combinations of conditions are met. MCC increases your chances of finding these.

- Especially for Complex Decisions: If your code has decisions with lots of combined conditions, MCC is crucial for ensuring the logic works as intended in all scenarios.

How to achieve 100% MCC:

- Identify Decisions: Analyze your code to find decisions with multiple conditions.

- Truth Tables: Create truth tables to list all possible combinations of condition values (true/false).

- Design Test Cases: Create test cases that map to each row of your truth table, ensuring all combinations are executed.

- Coverage Tool: Use a coverage tool that supports MCC to track your progress and identify missing scenarios.
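The truth-table step above can be sketched in a few lines. This is an illustration under assumptions: `decision` is a hypothetical stand-in for a real two-condition decision, and `itertools.product` is just one convenient way to enumerate the rows.

```python
from itertools import product


def decision(condition_a, condition_b):
    """Hypothetical decision with two conditions joined by AND."""
    return condition_a and condition_b


# Every row of the truth table becomes one test case: 2 conditions give 2**2 rows.
truth_table = list(product([True, False], repeat=2))

for condition_a, condition_b in truth_table:
    outcome = decision(condition_a, condition_b)
    print(f"conditionA={condition_a!s:<5} conditionB={condition_b!s:<5} -> {outcome}")
```

A decision with n conditions has 2**n rows, so the number of required test cases doubles with every condition added.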

Important Notes:

- High Cost: MCC can be more time-consuming to achieve than statement or branch coverage due to the increased number of test cases.

- Best for Critical Systems: For software where safety or reliability is paramount (e.g., aviation, medical devices), this level of rigor is often justified.