Despite the many benefits test automation offers, many software testers still find reasons not to use it fully. Faster releases, quicker feedback to the development team, more frequent test execution and increased test coverage are just a few of its advantages. Quite a few myths surround test automation, and this blog will help you identify them and embrace what it has to offer.

The most challenging task for a software tester when it comes to test automation is to understand its limitations and set goals accordingly.

Myths Surrounding Automated Testing

- Myth #1: It’s Better Than Manual Testing

- If you believe this, you need to understand one thing: automated testing is not testing per se. It is fact checking. When we already have certain knowledge about the system under test, we encode that knowledge as checks in the form of automated tests. The result of such a check helps confirm our understanding of the system.

Testing, however, is a form of investigation that gives us new information about the system under test. We should therefore avoid favoring one approach over the other, since both are required to gain quality insight into an application.

- Myth #2: 100% Automated Testing

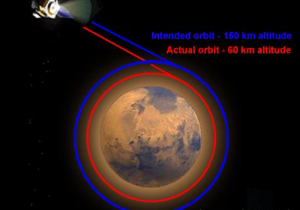

- 100% test coverage is impossible to achieve, and the same goes for test automation. While we can increase coverage by using more data and configurations and by covering various operating systems and browsers, reaching 100% is an unrealistic goal.

More tests don’t necessarily mean better quality or more confidence; what matters is how good your test design is. Focus on the most important areas of functionality rather than chasing full coverage.
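To get a feel for why 100% is out of reach, here is a rough count in Python. The browsers, operating systems and locales are hypothetical examples, and the single form field accepting any 32-bit integer is an assumption chosen only to show the scale of the problem.

```python
from itertools import product

# Hypothetical configuration matrix; swap in whatever your product supports.
browsers = ["Chrome", "Firefox", "Edge"]
operating_systems = ["Windows", "macOS", "Linux"]
locales = ["en-US", "de-DE", "ja-JP"]

# Every browser/OS/locale combination: 3 * 3 * 3 = 27 configurations.
configurations = list(product(browsers, operating_systems, locales))

# Assumption: one form field that accepts any 32-bit integer value.
values_per_field = 2 ** 32

# Exhaustively checking that single field in every configuration.
total_checks = len(configurations) * values_per_field

print(f"{len(configurations)} configurations")
print(f"{total_checks:,} checks for a single input field")
```

Even this small matrix produces over a hundred billion checks for one field, which is why choosing the right tests matters more than adding more of them.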

- Myth #3: Quick ROI Every Time

- Implementing a test automation solution requires developing a framework to support its operations, such as test case selection, reporting and data-driven testing. Framework development should be treated as a project in its own right: it requires skilled developers and it takes time.

Even with a fully functional framework, scripting automated checks takes longer up front. So when you need to give quick feedback on a new feature, checking it manually is faster.
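A simple break-even calculation shows why the return on investment is rarely immediate. This is a sketch with made-up figures: the `break_even_runs` function and the minute values are hypothetical, the model folds result review and maintenance into a flat per-run cost, and it ignores the cost of building the framework itself.

```python
import math


def break_even_runs(scripting_minutes: float,
                    manual_minutes_per_run: float,
                    automated_minutes_per_run: float) -> int:
    """Return how many runs it takes before automating a check pays off.

    Hypothetical model: automation costs `scripting_minutes` up front plus
    `automated_minutes_per_run` (reviewing results, upkeep) on every run,
    while a manual check costs `manual_minutes_per_run` every time.
    """
    saving_per_run = manual_minutes_per_run - automated_minutes_per_run
    if saving_per_run <= 0:
        raise ValueError("automation never pays off if each run saves no time")
    # Round up: a partial run does not recover the remaining cost.
    return math.ceil(scripting_minutes / saving_per_run)


# Made-up figures: 8 hours to script, 30 minutes to check manually,
# 5 minutes per automated run for reviewing results and upkeep.
print(break_even_runs(480, 30, 5))  # → 20
```

With these numbers the automated check only starts saving time after its 20th run, so for a one-off check on a brand-new feature, manual checking wins.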

- Myth #4: Automated Checks Have Higher Defect Detection Rate

- While vendor-supplied and home-grown test automation solutions are highly capable of performing complex operations, they will never replace a human software tester, who can spot even the most subtle anomalies in an application.

An automated check can verify only what it was programmed to verify, so scripts are only as good as the people who wrote them. A poorly scripted check can easily overlook major flaws in an application. In short, checking can prove the presence of a defect, but not necessarily its absence.
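Here is a tiny illustration of a check that verifies only what it was programmed to. The `apply_discount` function and both checks are hypothetical, and the function contains a deliberate bug.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce `price` by `percent` percent."""
    # Deliberate bug: divides by 10 instead of 100.
    return price - price * percent / 10


def check_price_not_negative() -> bool:
    # Only programmed to confirm the discounted price is not negative.
    return apply_discount(100.0, 5.0) >= 0


def check_price_value() -> bool:
    # Programmed to confirm the exact expected price (100 minus 5% is 95).
    return apply_discount(100.0, 5.0) == 95.0


print(check_price_not_negative())  # → True, despite the bug
print(check_price_value())         # → False, the bug is caught
```

The first check passes even though the price comes out as 50.0 instead of 95.0: a green result only tells you that the scripted condition held, not that the application is free of defects.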

- Myth #5: Unit Test Automation is All That We Need

- Understand that a unit test can identify only the errors a programmer makes within an individual unit, not the failures that emerge when the units work together. When all the components are tied together to form a system, a much larger area of testing comes into the limelight. Most organizations keep their automated checks at the system UI layer.

However, functionality is highly volatile during development, which makes scripting automated checks at that layer a tedious task. Spending time automating functionality that is likely to change is not advisable and may cause difficulties in the later stages of development.
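The gap between unit-level and system-level behavior can be sketched with two hypothetical components. Each passes its own unit test, yet they fail when combined because they disagree on units (cents versus dollars). The function names are invented purely for illustration.

```python
def parse_amount_in_cents(text: str) -> int:
    """Hypothetical component A: parse a price such as '12.34' into cents."""
    dollars, cents = text.split(".")
    return int(dollars) * 100 + int(cents)


def format_price(dollars: float) -> str:
    """Hypothetical component B: format a dollar amount for display."""
    return f"${dollars:.2f}"


# Each unit test passes in isolation.
assert parse_amount_in_cents("12.34") == 1234
assert format_price(12.34) == "$12.34"

# Wired together, A's output (cents) is fed to B, which expects dollars.
shown = format_price(parse_amount_in_cents("12.34"))
print(shown)  # → $1234.00 instead of $12.34
```

Neither unit test can catch this; only a check that exercises the components together reveals the failure.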

- Myth #6: System UI Automation Is Everything

- Relying solely on automated checks, especially at the UI layer, can have a truckload of negative effects. During development the UI goes through numerous changes as visual design and usability are improved. When the UI changes but the underlying functionality does not, the checks can still fail and give a false impression of the application's state.

Automated checks at the UI layer run more slowly than those at the unit and API layers, which slows down feedback to the team. Root cause analysis also takes longer, because a failing UI check does not pinpoint where the bug is. It is therefore necessary to identify the layers where automated checks are most helpful.

Automated checks are not a one-time effort; they need constant monitoring and updating. Above all, understand their limitations and set realistic goals to get the most out of your automated checks and, most importantly, your team.